Advisory Board “User Research 101” presentation

This is the “User Research 101” presentation I wrote and delivered to executives, product managers, and developers within The Advisory Board Company - Crimson, to evangelize the benefits their teams and products could see by engaging with the then-new User Research department and “leveling up” their research and design practices with additional rigor.

Details about the presentation, including full references, are in “Evangelizing the business value of user research (especially in enterprise software teams).”

Our then-product manager, Olivia Hayes, provides her own take and background of this talk, in “Using User Research — An Enterprise Software Love Story.”

This presentation is lightly redacted from the original, and is presented as a “hypertranscript,” with the ~40 minutes of audio synced to the slides and text. Click on any word or slide to advance or rewind to that position, and click on any whitespace to play or pause.

- Introduction (~2m)

- Tell them what you’re going to tell them (~1m)

- User Research knows product management is hard (~3m)

- User Research can take on some of that work (~3m)

- How User Research works and the value you see (~10m)

- Examples of User Research integrating into existing processes (~15m)

- Tell them what you told them (~1m)

- Closing (~3m)

¶ 1

¶ 1

Hi, welcome to User Research 101. We have a lot to cover but, first, a few points of order.

In front of you, you should have a pen, a stack of index cards and a feedback form. Please write your name, your title, and the product or products you work on, on an index card and then turn that card over. As you have questions during the talk, write down the slide number (found in the lower right) and your question on a fresh index card and then add it to the pile. We’ll have time for questions at the end but we’ll also collect all of these cards and reply to them via email. If you’re dialing in, start a new email to me with the same. As Q&A starts, we’ll ask you to fill out your feedback forms. We’ll be collecting these at the door before you leave so make sure you’ve completed yours. If you’re dialing in you’ll see a link to an online survey in the GoToMeeting chat you can use.

Erin, tell them what we’re going to be talking about today.

¶ 2

¶ 2

(Erin Howard, then our director, was asked to intro the talk as more and more executives started showing up to these sessions.)

¶ 3

¶ 3

(Now, these things might not seem like traditional functions of user research, but these were the business functions where we could provide value. In a lot of organizations, user experience ends up being a function of what the organization is missing, and these were the things we discovered that individual product teams were often missing.)

¶ 4

¶ 4

(She would turn it back over to me and I would say:)

¶ 5

¶ 5

Any questions before we start?

(And then we would start.)

¶ 6

¶ 6

So, I’m going to talk to you today about how User Research knows product management is hard.

¶ 7

¶ 7

I’m going to tell you about how User Research can take on some of that work.

¶ 8

¶ 8

I’m going to describe how User Research works and the value you’ll see from it.

¶ 9

¶ 9

And, I’m going to give you examples of User Research integrating into existing processes, so that you know that if it works in all of these processes, it can work in whatever process your team currently has.

¶ 10

¶ 10

Let’s start with “User Research knows product management is hard.” Developers and development managers: don’t tune out. Most of this applies to you as well. But, we expected more PMs to turn out than devs.

¶ 11

¶ 11

You have so many inputs.

We’ve all been on projects where the highest paid person’s opinion defined what would ship. We’ve all been on projects where the angriest customer defined what would ship. We’ve all been on projects where we simply built the next thing in the backlog without knowing requirements, or who put it there, or who asked for it, or why.

And, we know that creating a reasonable roadmap, a successful roadmap, a comprehensive roadmap in the face of all of these things is hard, especially when company or customer politics show up. And, we know that convincing everyone that your plan is the right one, and sticking to it in the face of these conflicts, can be even harder than putting it together in the first place.

But, I heard something a while back that made me realize that not everyone has this problem in the same way that we do. During a company all-hands, an executive was asked how they decided what our internal KPIs should be, and how did they know that they were right?

You know what they said?

¶ 12

¶ 12

“I made them up.”

¶ 13

¶ 13

And, it turns out that happens a lot, in a lot of circumstances. Simon Bennett gave a great talk about this recently, including the psychology of rationalizing decisions. In the absence of data, opinion and intuition and fabrication and, yes, company politics take the day.

And, that executive can more safely make decisions on the spot than many of us can, because they have not only the responsibility and accountability, but also the authority to.

¶ 14

¶ 14

That executive has the power to define what counts as knowledge and rational behavior.

¶ 15

¶ 15

An everyday PM doesn’t have that authority. Your developers report up through engineering. Your services people and your sales people and your client relations people? They all report up through their own groups.

¶ 16

¶ 16

You get your job done through your relationships, by putting all of these pieces together, trying to get the right balance of compelling…

¶ 17

¶ 17

…and achievable.

And, that relationship includes the one with the company itself, making sure you’ve evangelized your roadmap upwards enough: tied to executive goals and company pillars and values. It’s not that you can’t have your own ideas and goals, just that there’s an element of needing to subsume them, to tie them to other initiatives, to sell them as part of something greater.

¶ 18

¶ 18

So, for you, we want User Research to mean:

- that you don’t have to make things up,

- that you can have data to hold up against others’ opinions, and

- that you can be more confident that you’re making the right opportunity-cost tradeoffs: that you’re having your developers and designers work on the best of all possible things; that you’re serving the most customers instead of just the one, lone, angriest customer.

¶ 19

¶ 19

I’m going to tell you how User Research can take on some of that work.

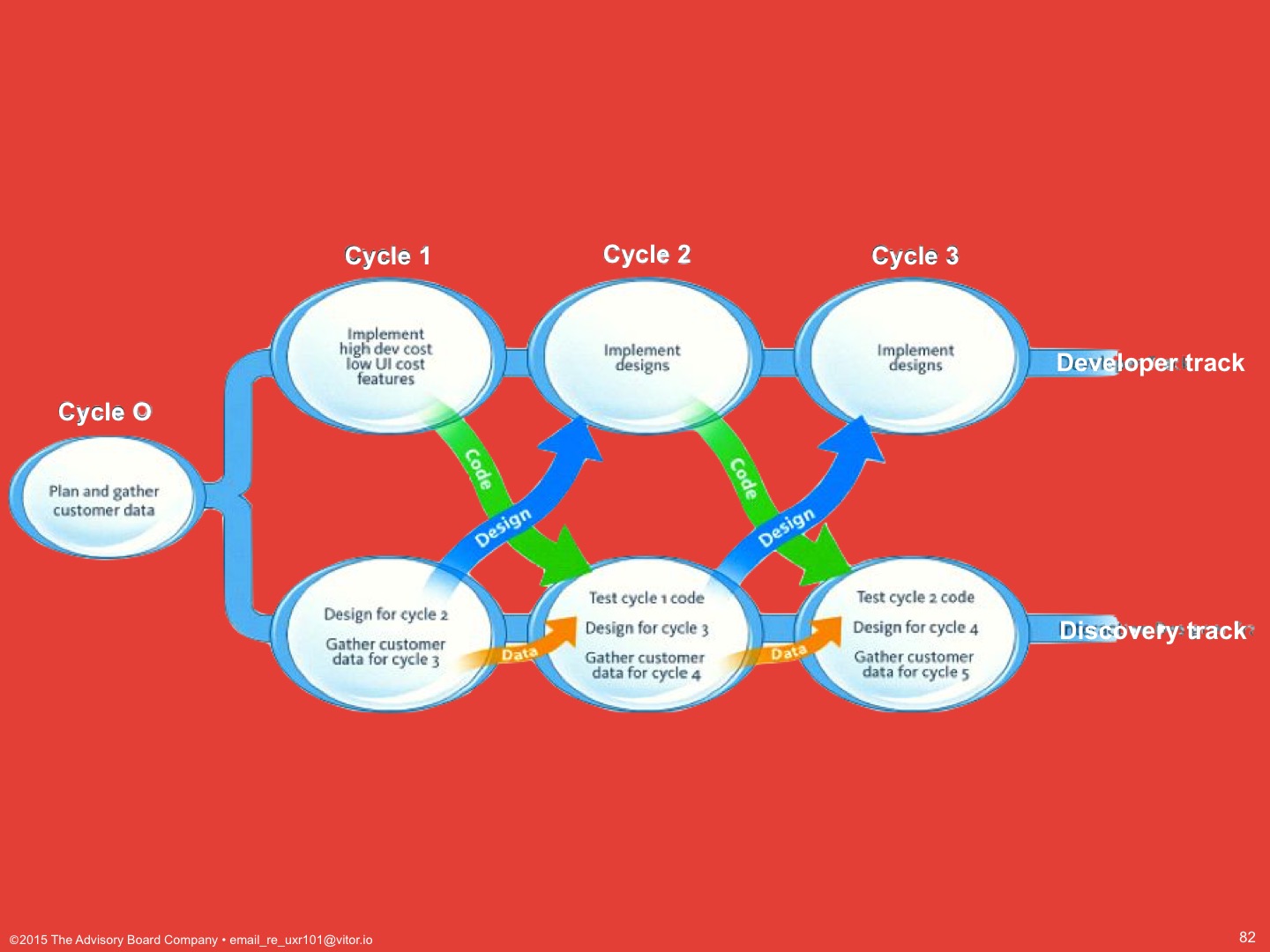

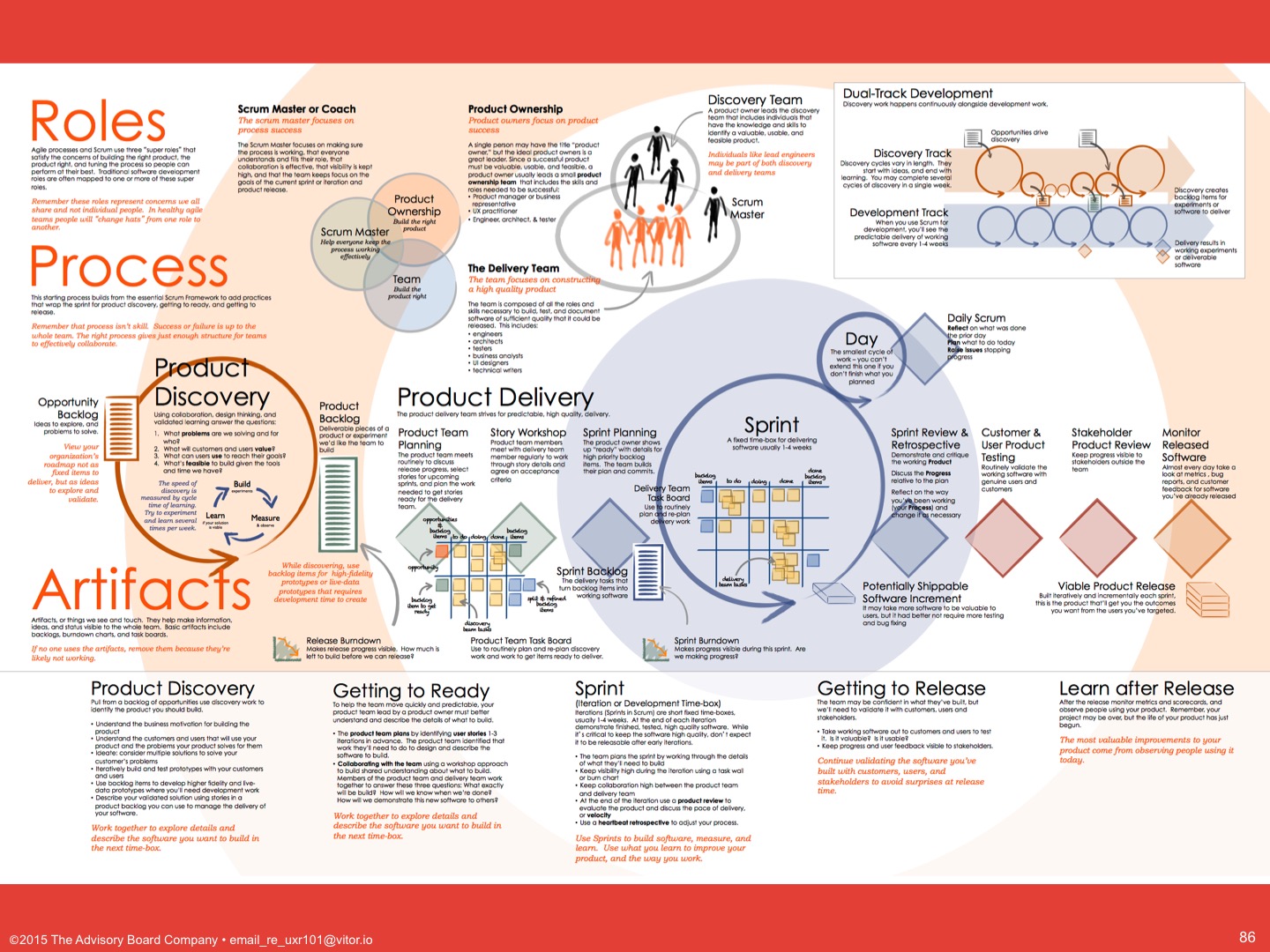

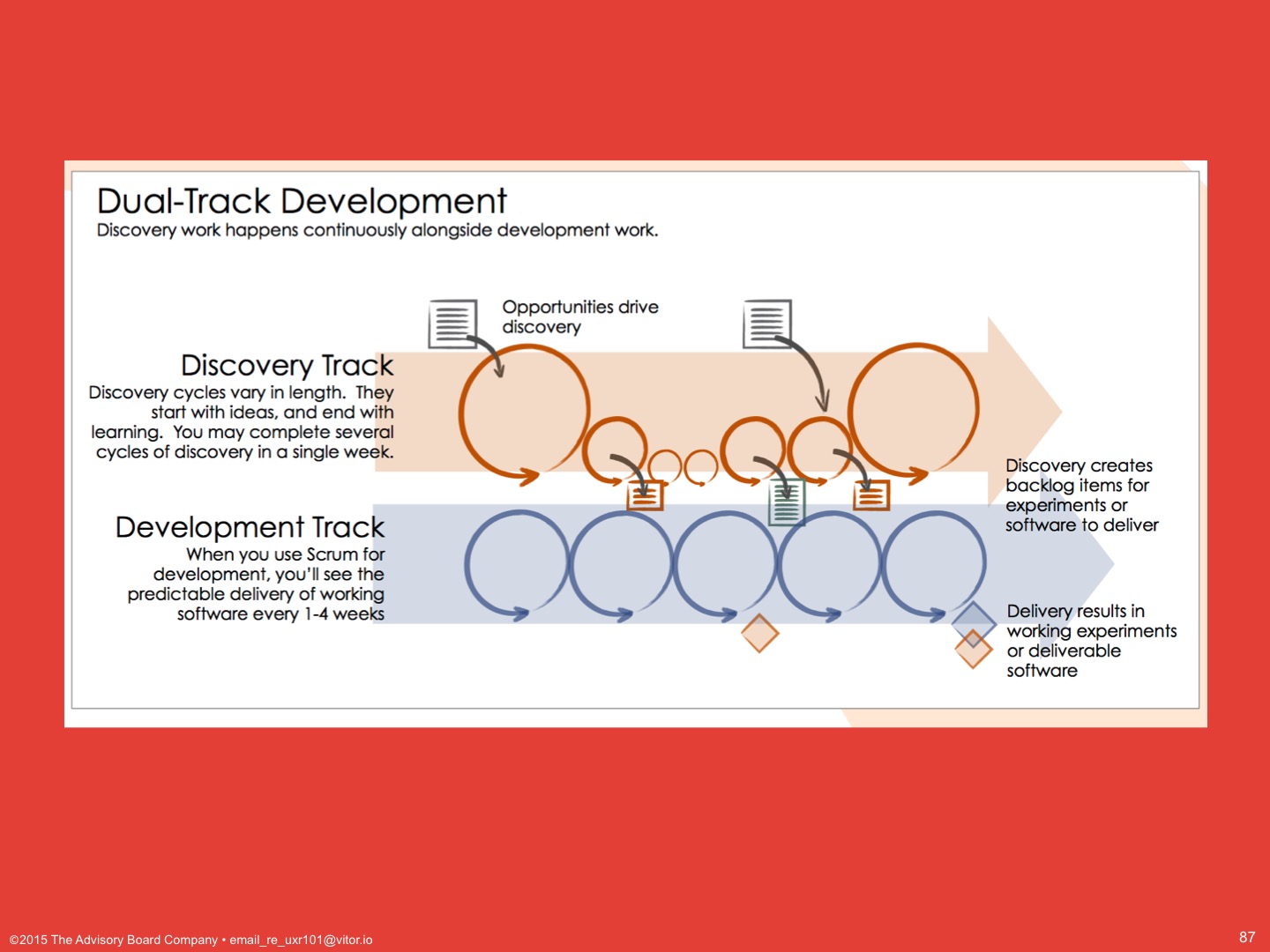

¶ 20

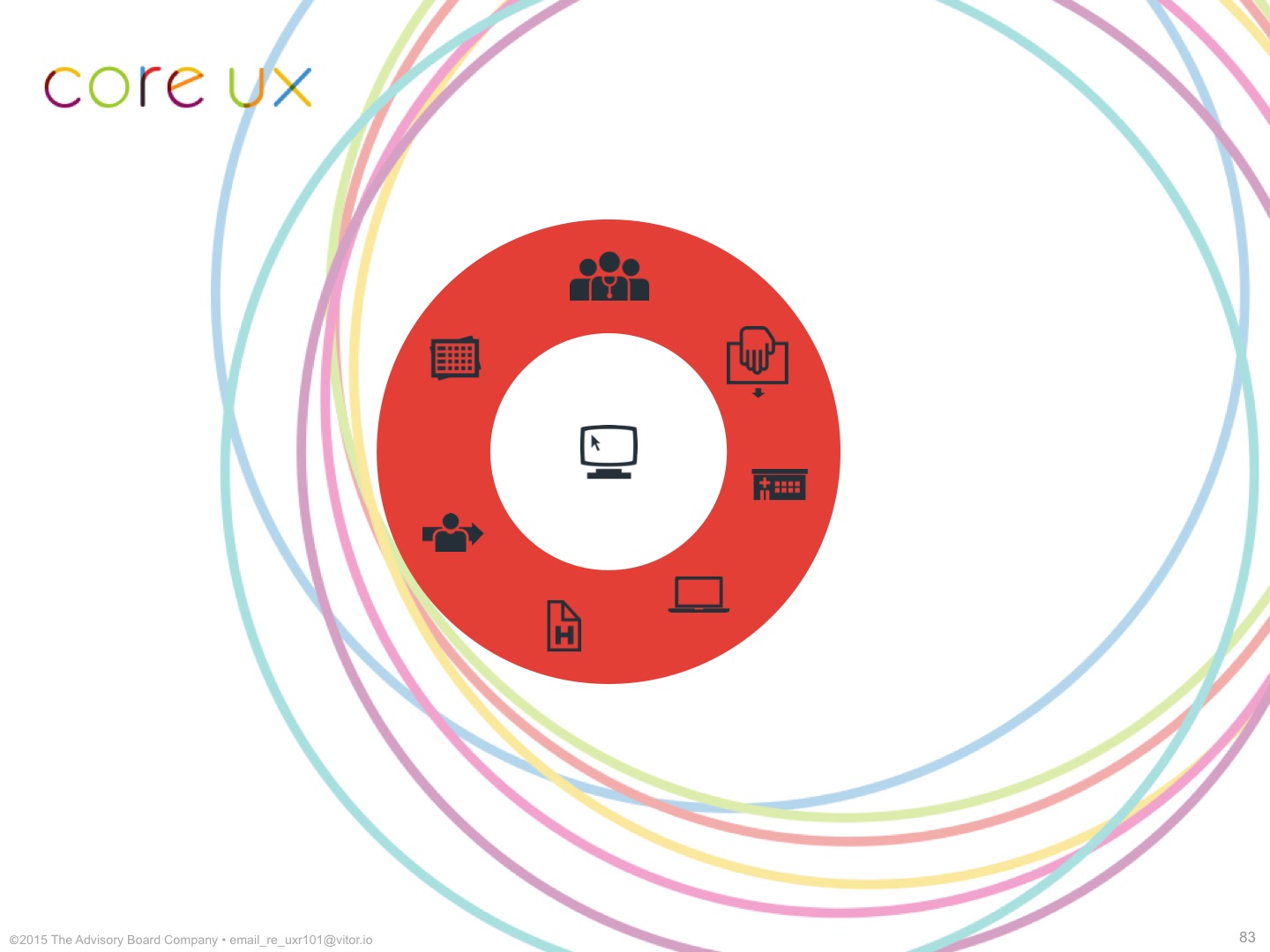

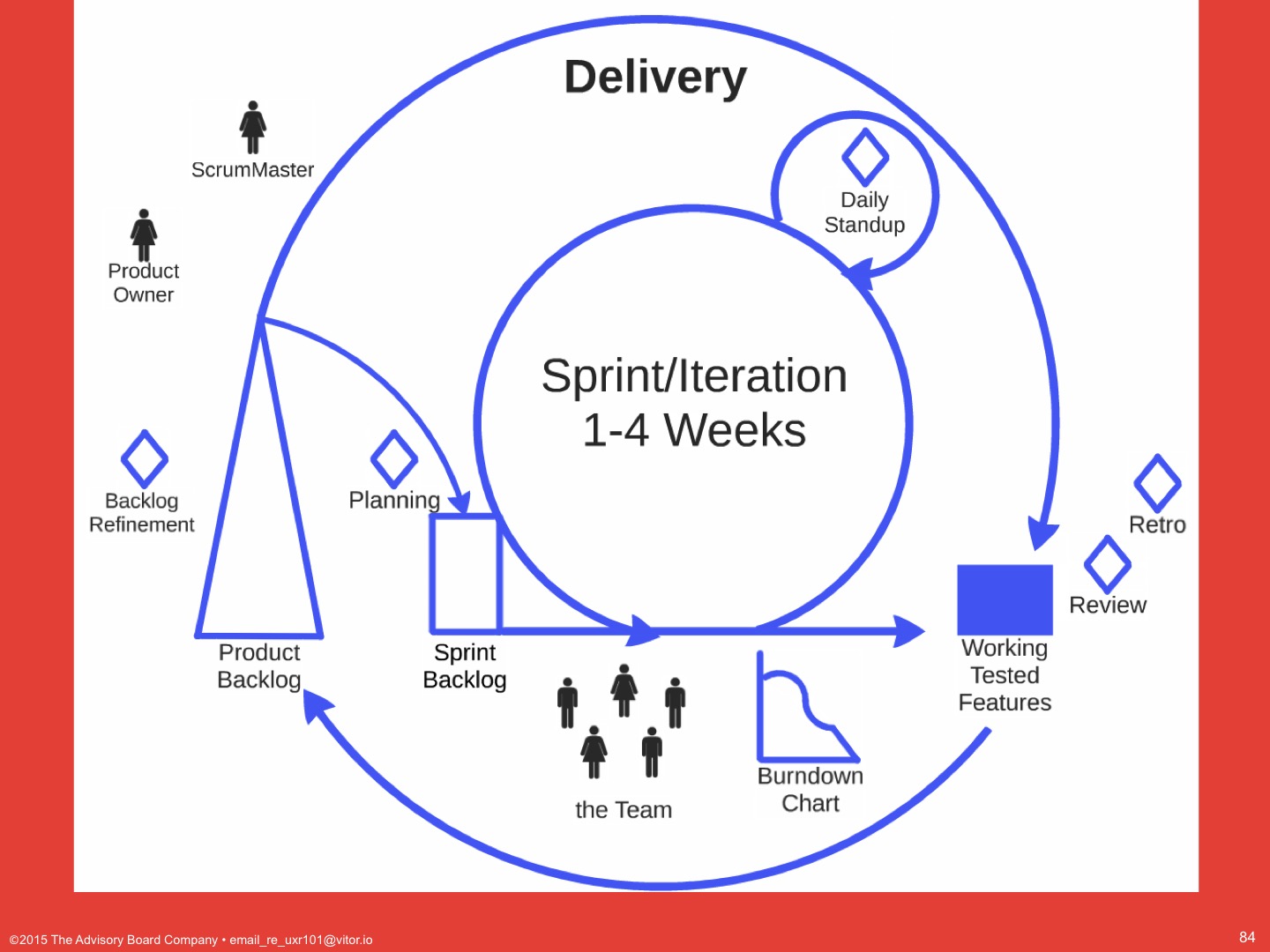

¶ 20

We know that managers can feel accountable to sales, or to high-profile customers, or to customer retention agents, or to other outside forces seeking to make you compromise your roadmap.

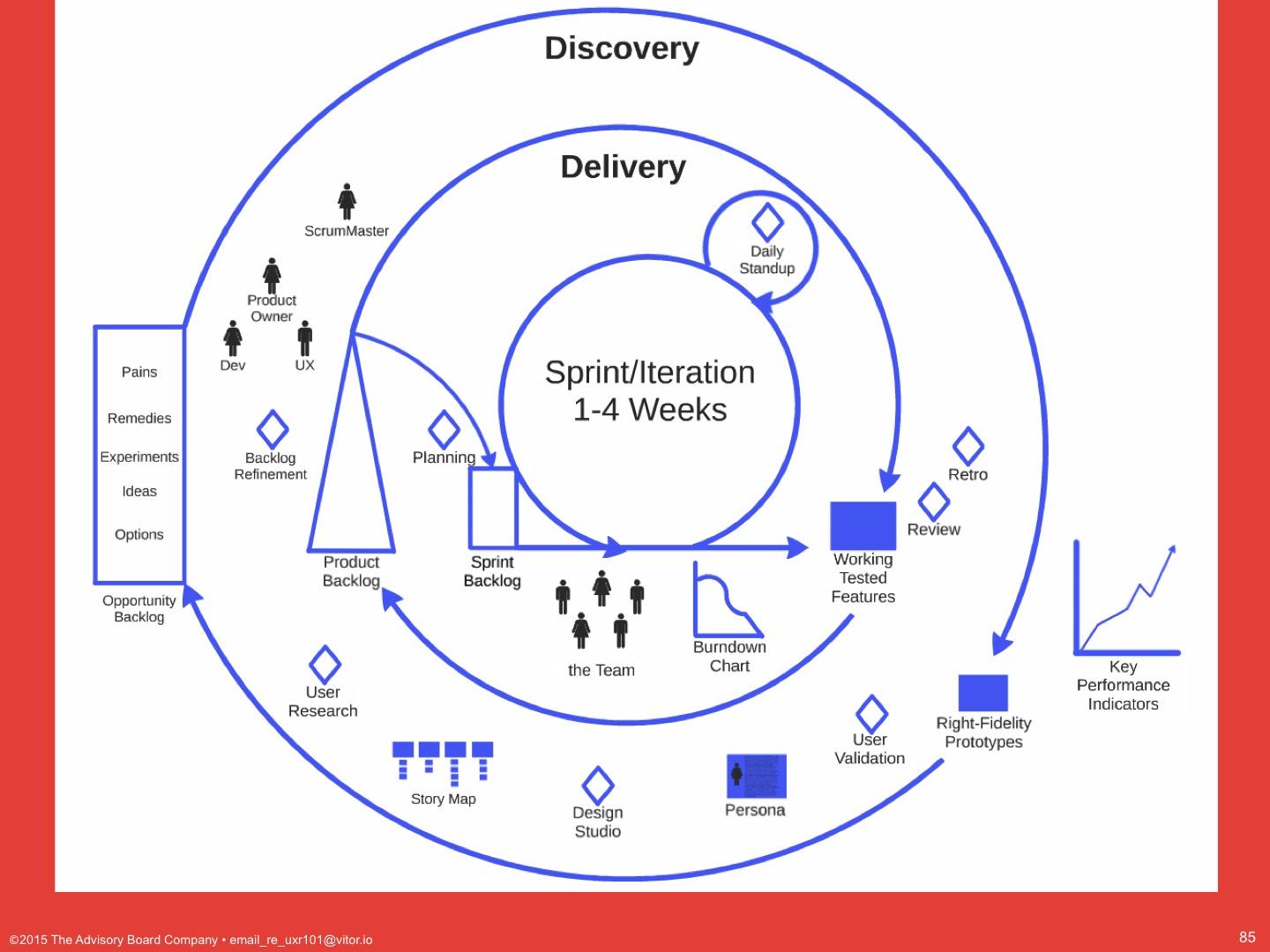

User Research can help you change the conversation to being accountable to the users by providing qualitative and quantitative data to support the opportunity-cost tradeoffs that you want to make.

¶ 21

¶ 21

We know that managers need to maintain good internal and external relationships. We can take one of those relationships off your hands.

User Research can perform the business function of maintaining relationships with your users so you can focus on evangelizing your (hopefully research-backed) roadmap to your team and upward.

¶ 22

¶ 22

We know that teams can suffer attrition and management above you can change.

And, so, User Research can perform the business function of knowledge management and transfer, to organize, preserve and teach what you and your team have learned about your customers, through attrition or organizational change.

We can work with you to conduct the research, present it, apply it and then re-teach it to your team as your team changes.

¶ 23

¶ 23

Finally, User Research can help inform your decisions throughout your product lifecycle. These are the more traditional types of research activities you probably expected to hear about today.

Now, I don’t know what your particular processes are, so I’m going to show you four examples from industry and we can talk about your specific ones in Q&A later.

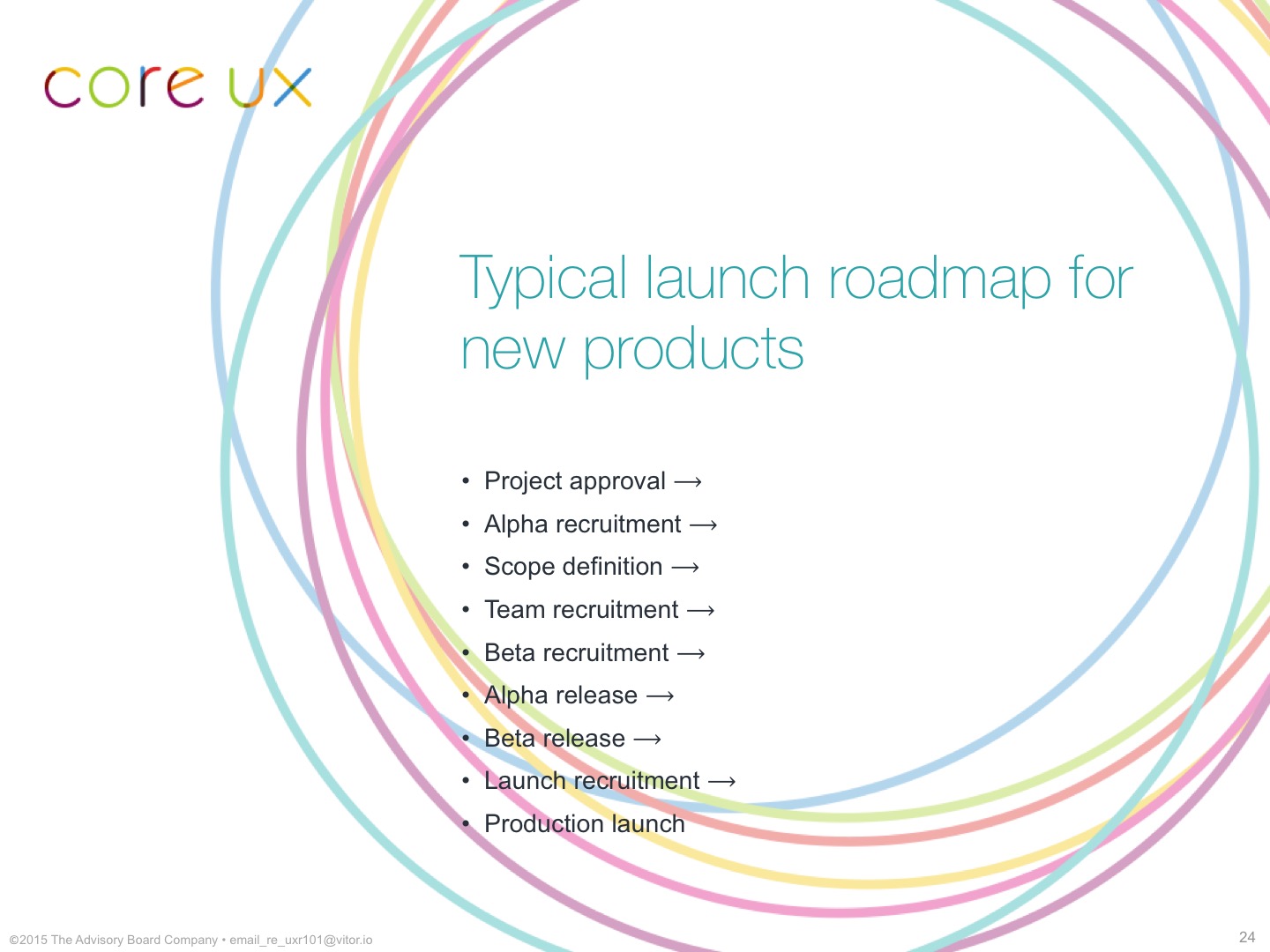

¶ 24

¶ 24

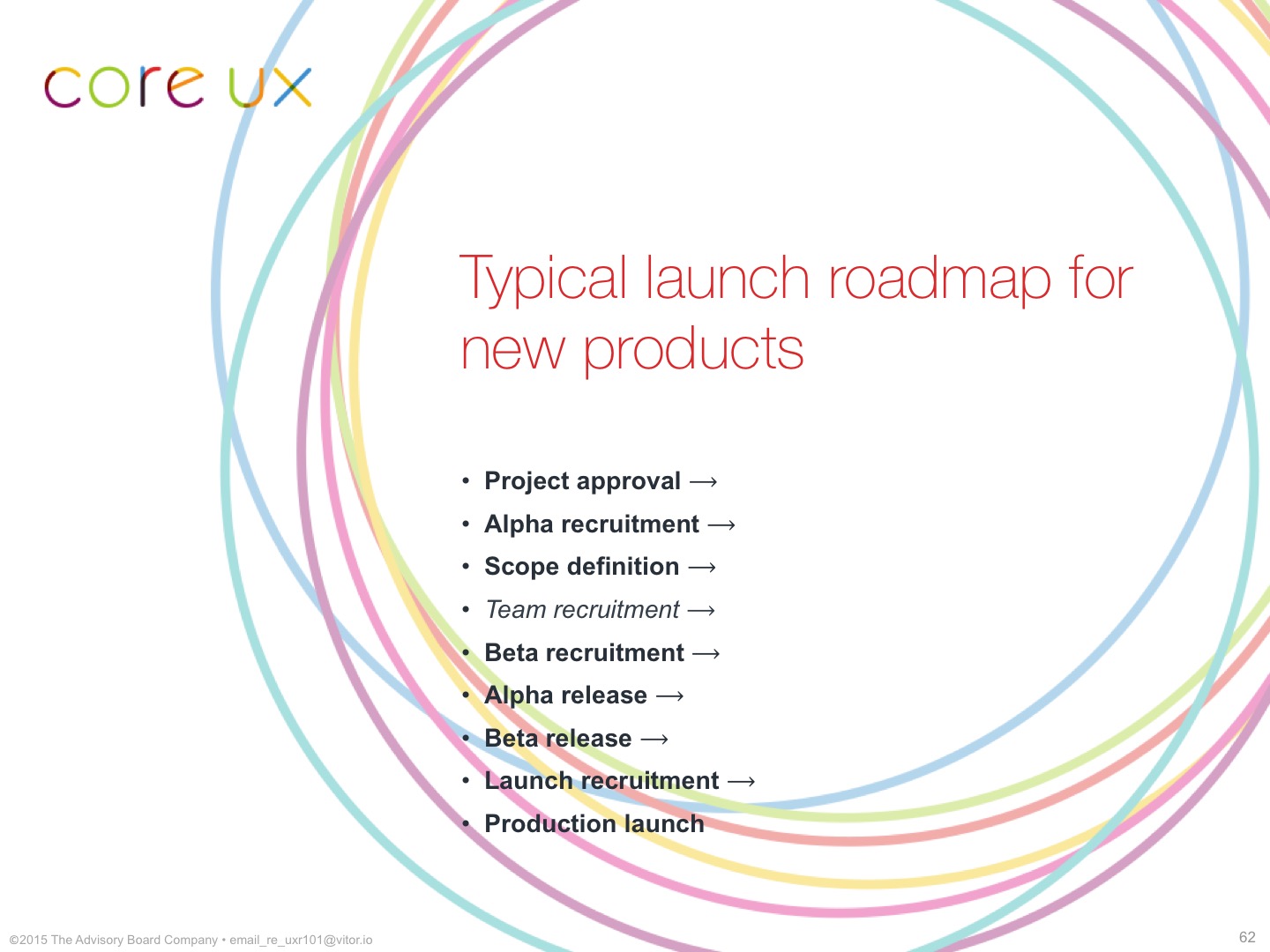

This is a typical launch roadmap for new products and many of our products followed this roadmap when they launched.

These are oriented around a development team’s efforts, but let me highlight the parts where User Research can provide insight.

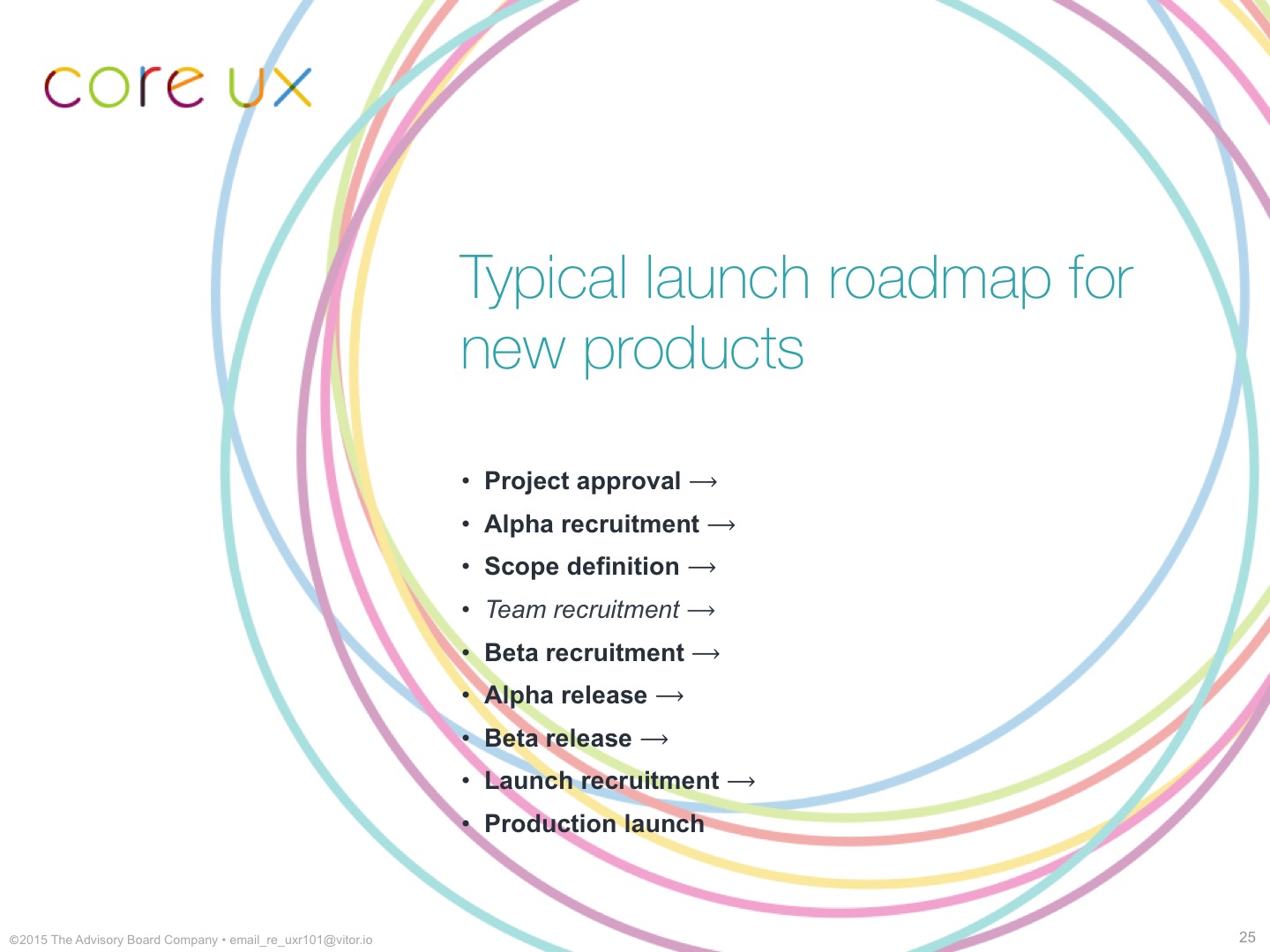

¶ 25

¶ 25

We’ll look at this in more detail later, but User Research can inform every stage in the roadmap, except for the part where you’re recruiting your internal product team.

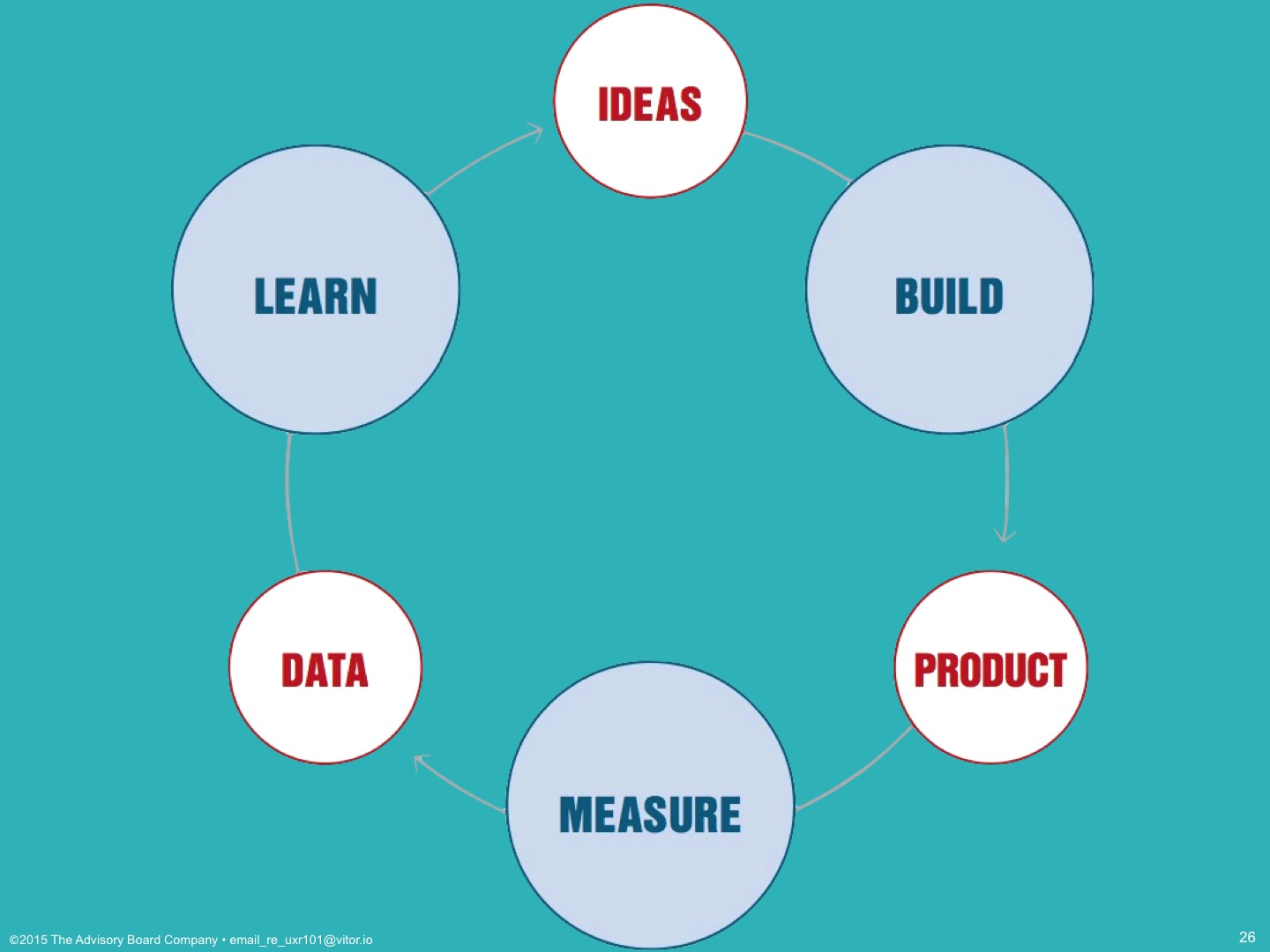

¶ 26

¶ 26

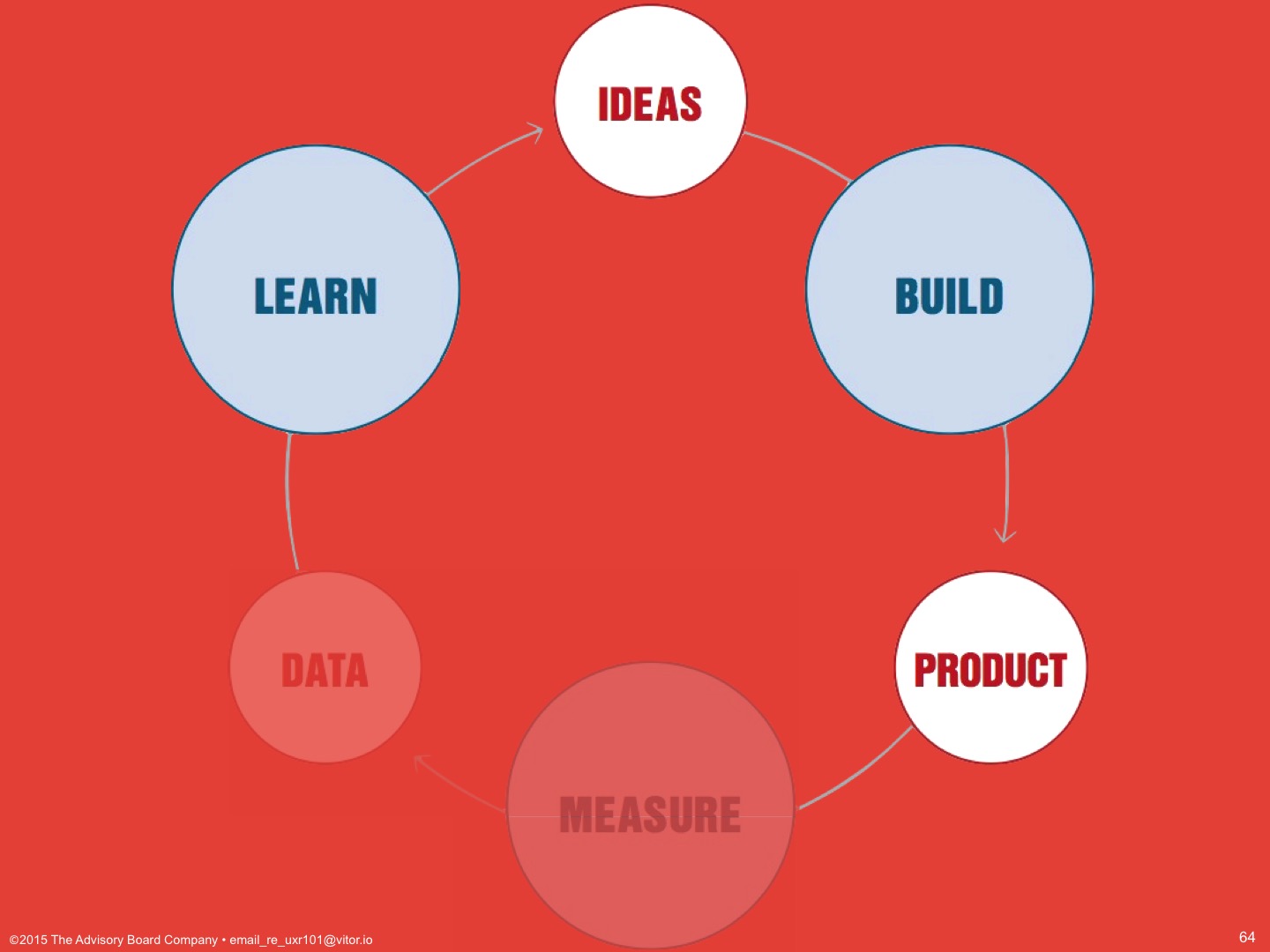

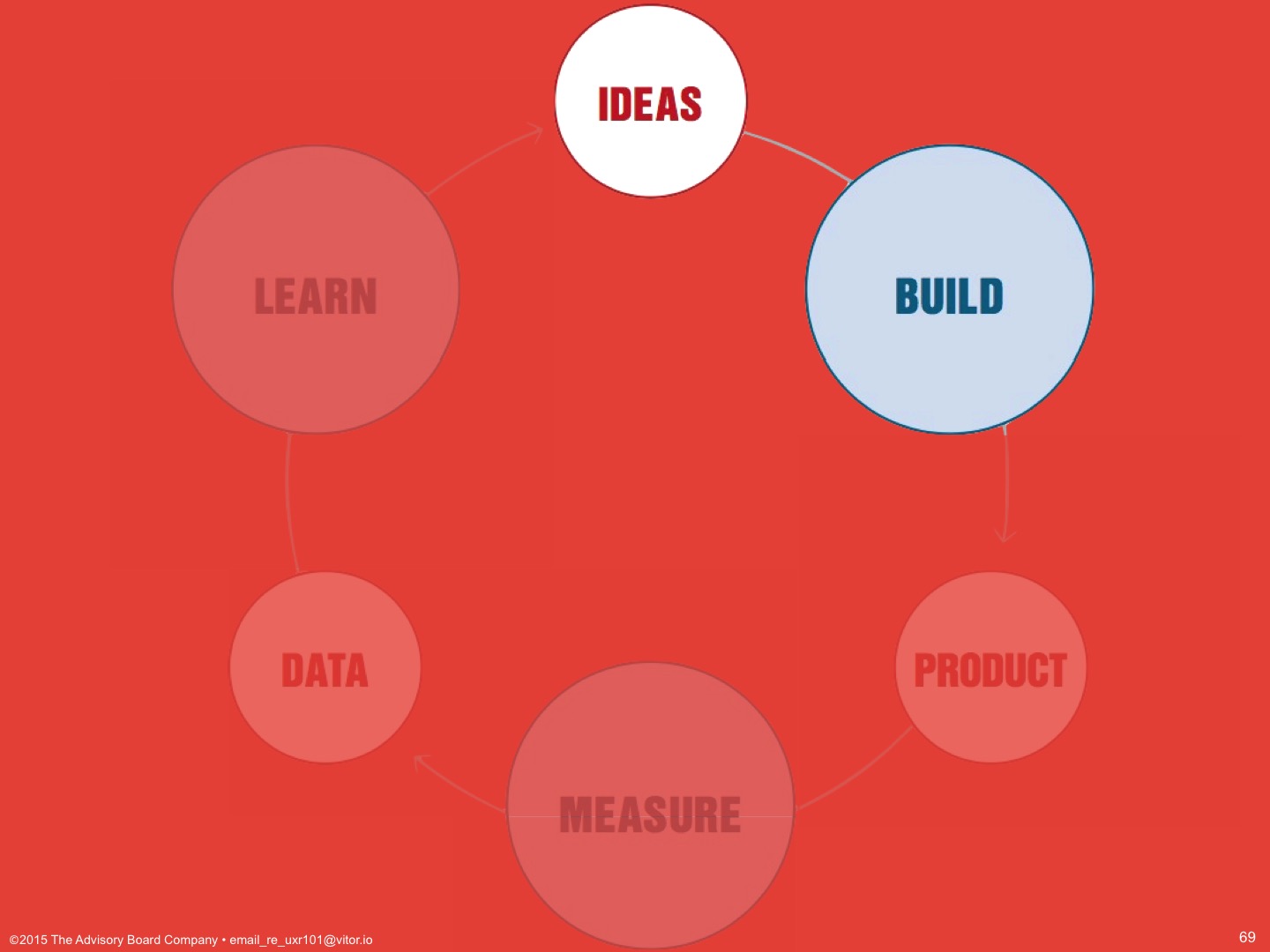

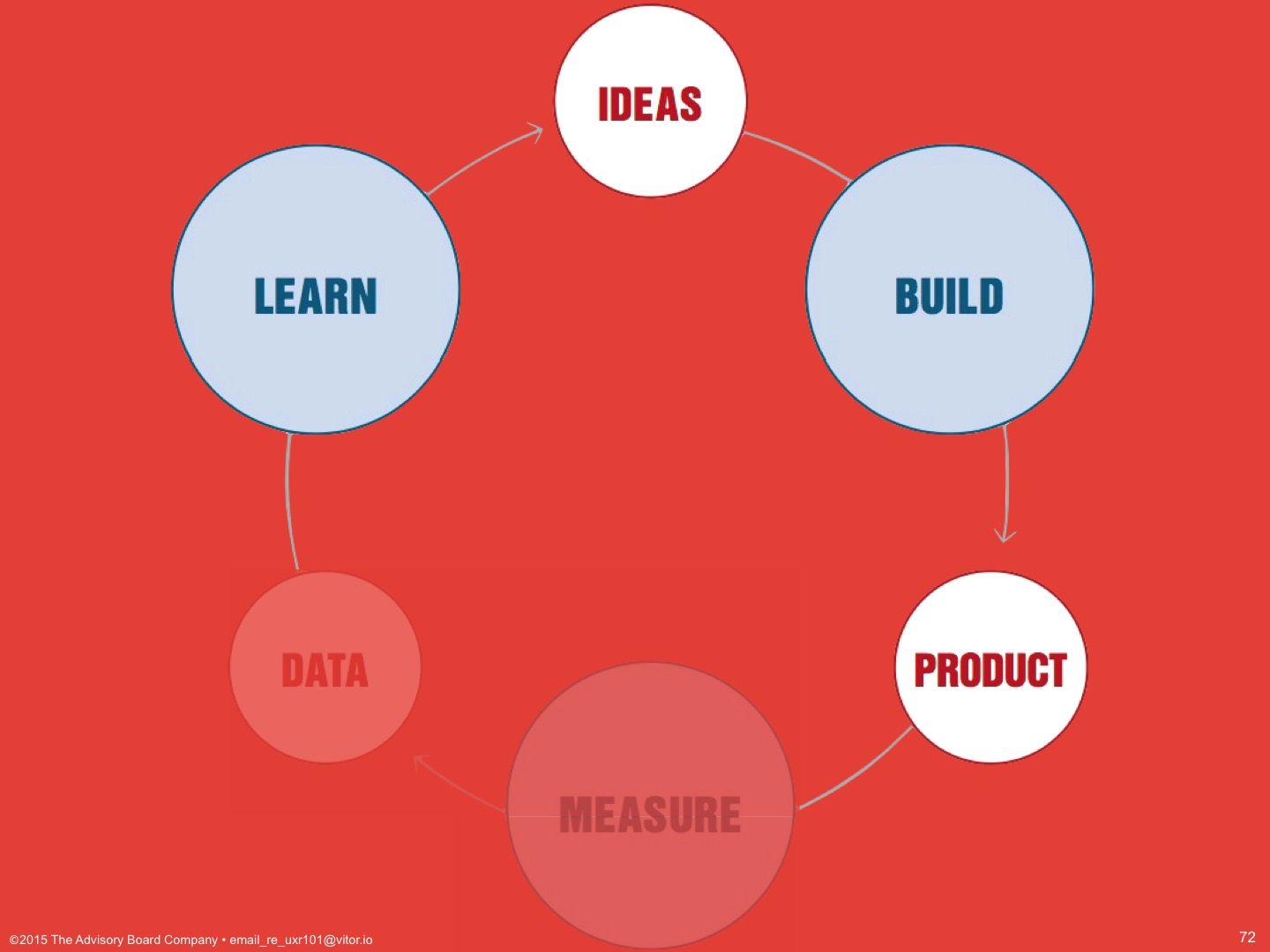

Here’s Eric Ries’ Lean Startup feedback loop from his book The Lean Startup, which people talk about a lot here. This feedback loop is first used to figure out what kind of product to build and then what features to build within that product, over and over, forever.

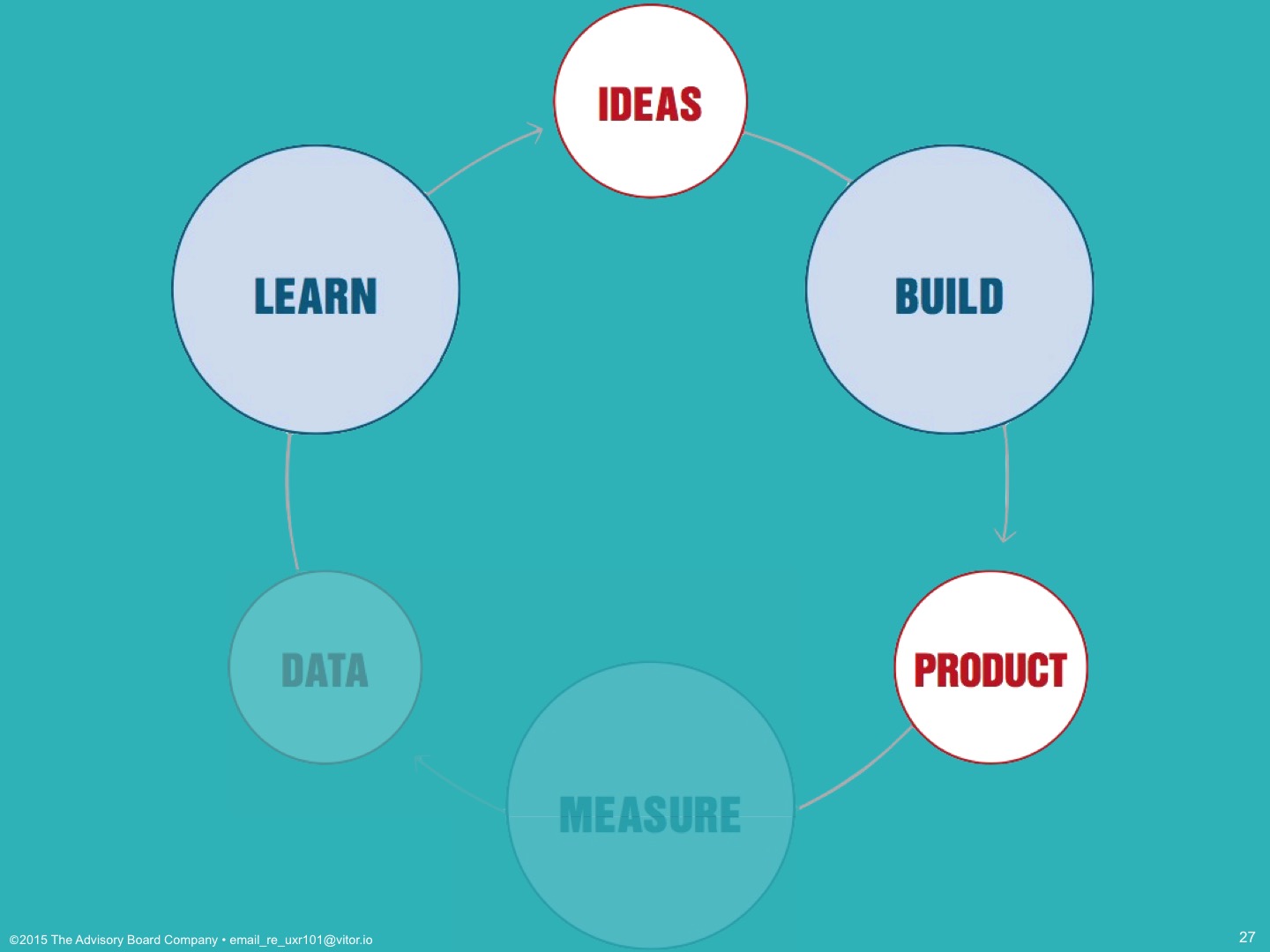

¶ 27

¶ 27

User Research is a direct part of or can substantially inform two-thirds of this loop.

¶ 28

¶ 28

Here are the six stages of Product Discovery, a design sprint process which I think most PMs should have participated in the training for by now.

¶ 29

¶ 29

You might remember them better by the deliverables you produced during the workshop.

¶ 30

¶ 30

And, it should be no surprise that User Research is a direct part of or can inform the entire process.

¶ 31

¶ 31

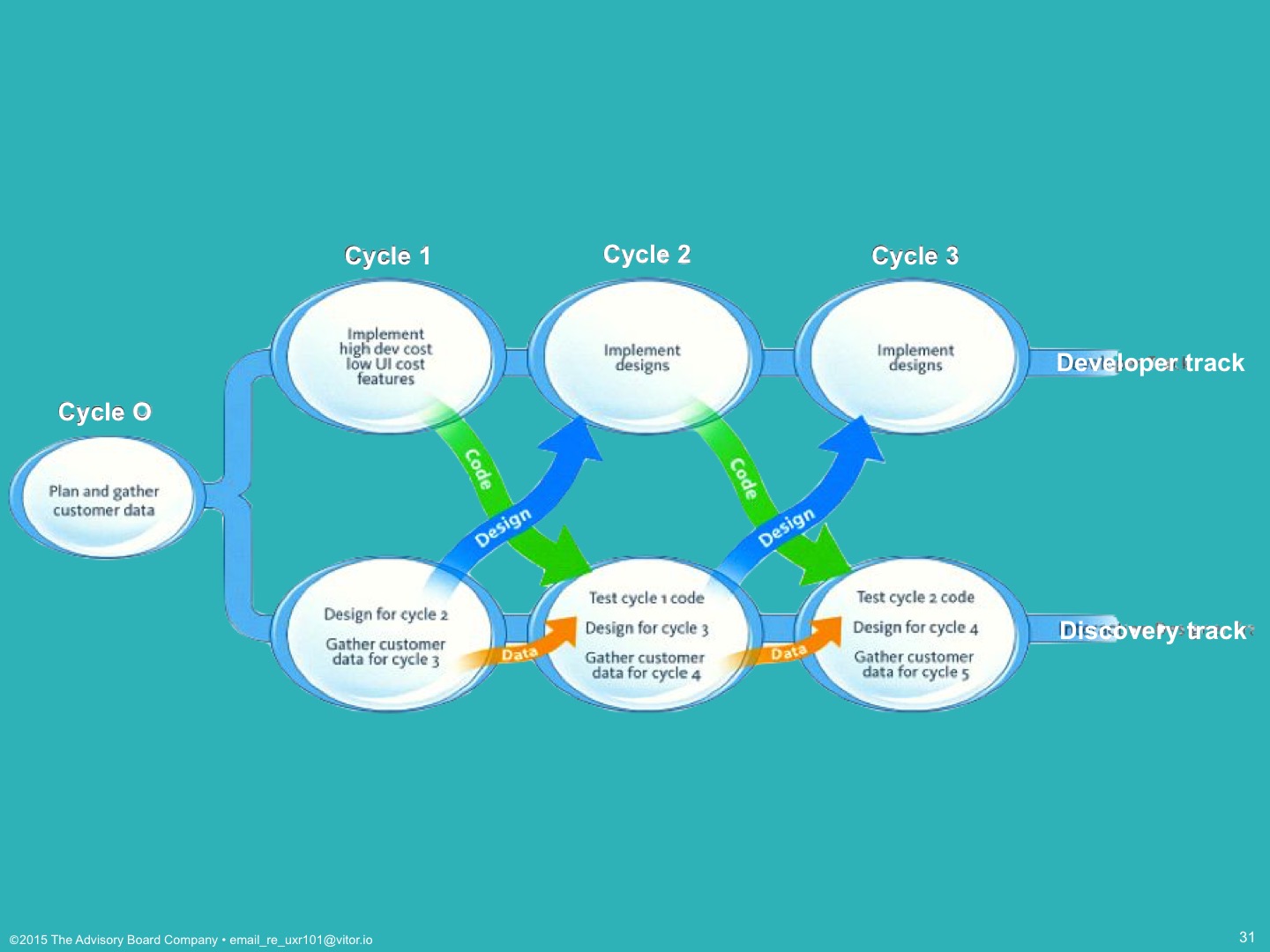

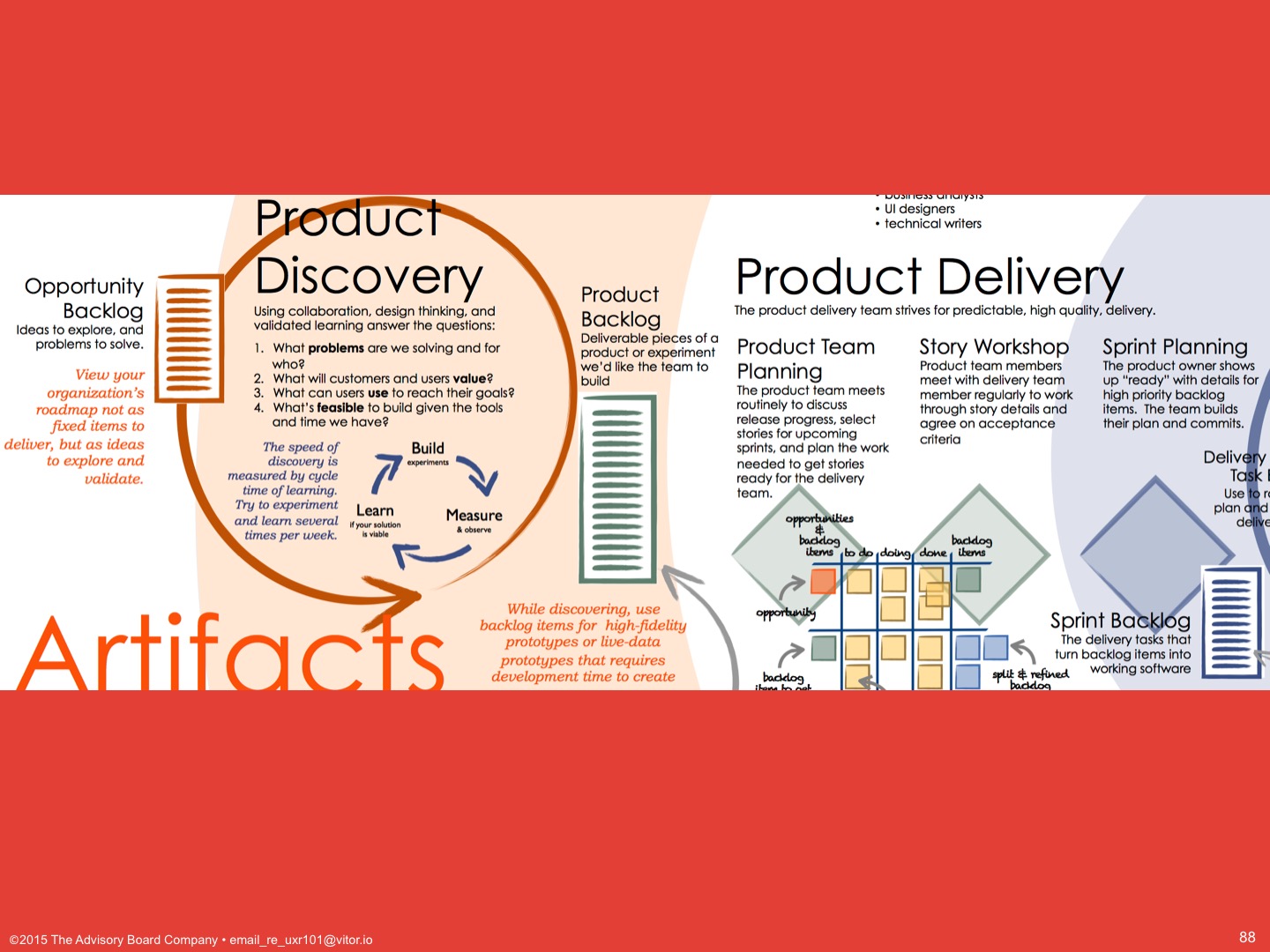

Finally, this is dual-track SCRUM. If you have UX people on your team and they’re working ahead of your developers, you’re probably doing some form of this already.

User research is literally one of the two tracks in dual-track SCRUM and the regular communication with development in their own track means User Research can be involved in the entire thing.

¶ 32

¶ 32

User Research has a place in all of these processes…

¶ 33

¶ 33

…which means User Research can fit into your processes, too.

¶ 34

¶ 34

I’m going to explain how: I’m going to tell you how User Research works and the value you see from it.

¶ 35

¶ 35

User Research fits in all of these processes.

It fits everywhere because we’re concerned with a broader context.

¶ 36

¶ 36

That broader context is your product’s entire context of use. A typical product team is concerned with the center ring, here: the computer screen and the stack that leads to that; the hardware, the database, the data, the application stack, the UI.

¶ 37

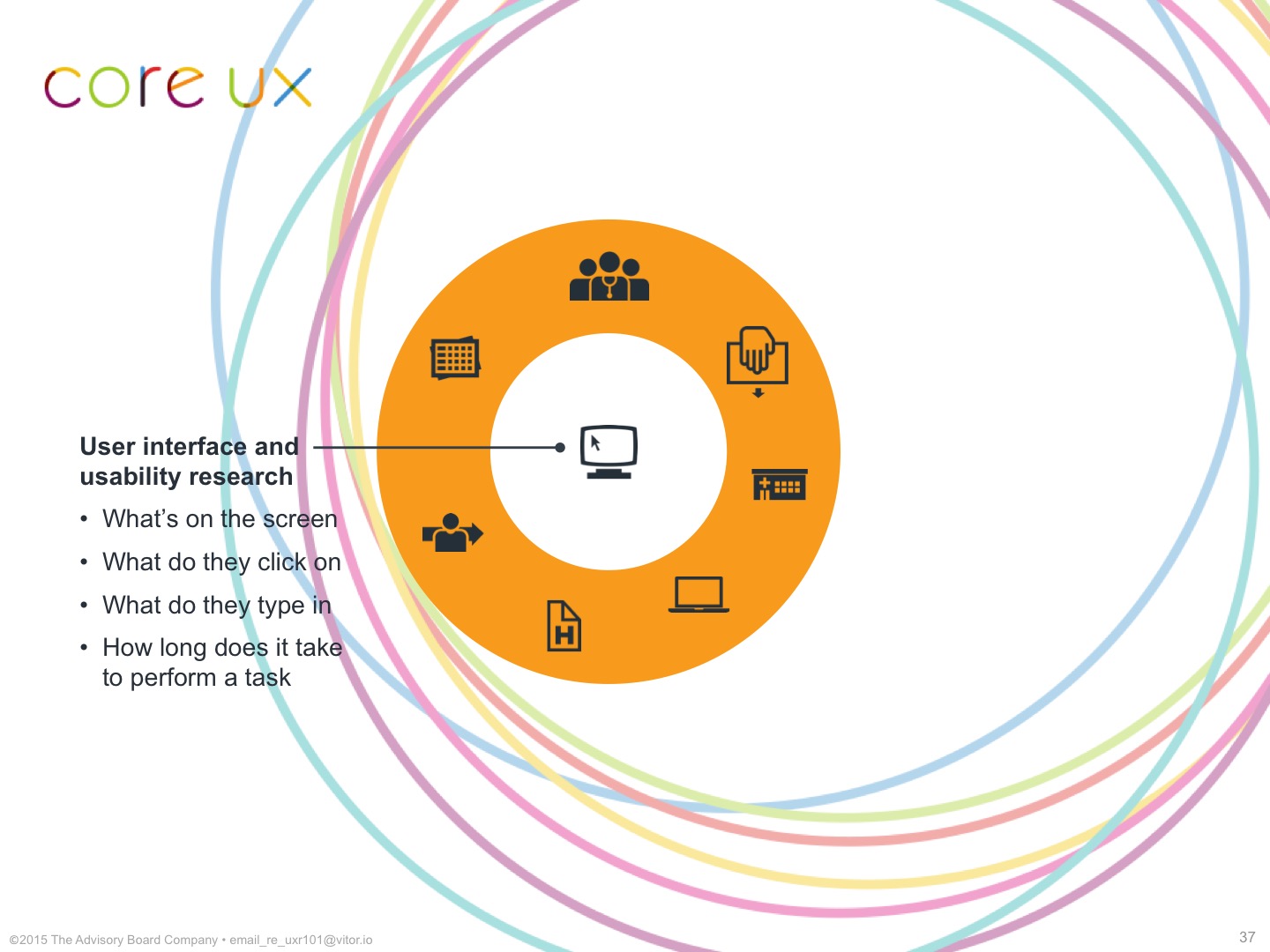

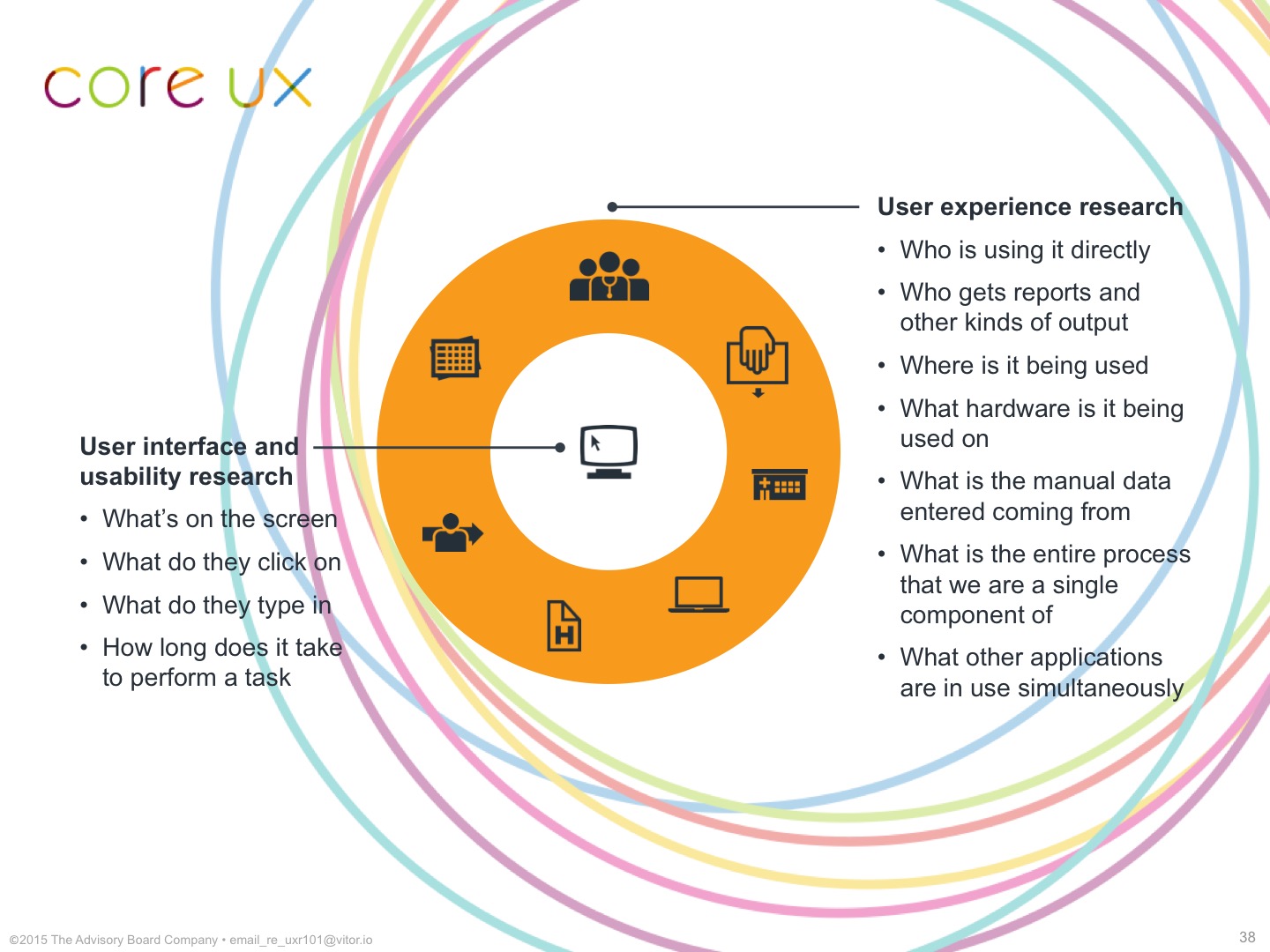

¶ 37

And, your interaction designers and your product strategists are concerned with product-specific work around what’s on the screen.

¶ 38

¶ 38

User Research concerns itself with everything else: not just what’s on the screen now, but who is sitting in front of it? And, who told them to do that work? What will happen with that report? How does that knowledge move through the organization and effect change?

¶ 39

¶ 39

We’re concerned with the broader context for two reasons.

First, because it’s our mandate.

¶ 40

¶ 40

Our mission is to ensure that Advisory builds the right products, not just Crimson. We serve The Advisory Board as a team that’s dedicated to analyzing and elevating end-user behaviors and their relationships with our products and technology as a whole.

¶ 41

¶ 41

And, we assist in solving the firm’s biggest problems through this broader context because it gives us widely-applicable answers.

A broader context is how we can do research for someone else’s product and use that to answer questions for your product, too.

¶ 42

¶ 42

We’re able to collect those answers because we work differently than other departments might:

- we look to make no assumptions;

- we use ethnographic methods;

- we ask questions differently; and, through those efforts

- we’re able to provide generative and validative value to your product.

Let’s look at each one of those in turn.

¶ 43

¶ 43

“Make no assumptions.”

Let me give you an example by way of contrast.

Product managers assume there’s a product to manage. Right? It’s in the job title. It’s a core assumption. And, development assumes there will be a database with correct data in it, for some value of correctness.

¶ 44

¶ 44

User Research asks, what happens when those things, those fundamental tenets, aren’t true?

And, teams have experienced these things already. The product goes down, and users fall back to past practices or tools, and now they have to reconcile that work after the software comes back up. Or, the user perceives the data in the product to be inaccurate, regardless of whether the math is correct, and so your product utilization plummets.

Having User Research understand the broader context of use makes integrating your product into those human processes easier, and understanding how users think and feel about their data, or its collection processes, or its attribution, means we can design and build reassurances about that provenance into the system from the beginning. And there are products where that’s been very successful.

¶ 45

¶ 45

When we go to understand that broader context of use, we use ethnographic methods to do it. Those are qualitative research techniques, and the data is collected with rigorous practices.

Qualitative research techniques come out of the social sciences. They’re flexible, iterative explorations like semi-structured interviews with open-ended questions, or shadowing, or diary studies. You’ll see elements of ethnographic research in history, and anthropology and even journalism, but I want to look at the rigor part specifically for a moment.

¶ 46

¶ 46

We’re all familiar with this quote, right?

“If I had asked my customers what they wanted, they would have said a faster horse.”

For sake of example, let’s say you were the product manager on this project, and you needed to make a decision. Which would you be more confident in?

¶ 47

¶ 47

“I’ve been hearing that faster horses could be the next big thing.”

versus

“Sixteen agricultural managers are quoted as seeking more efficient harness animals for purchase in the next twelve months to improve their throughput and production yields…

¶ 48

¶ 48

…and here are their names, highlighted transcripts and call recordings.”

I’m not suggesting that the former person is making anything up. But, rigor in research design and data collection gets you a lot of benefits besides confidence.

¶ 49

¶ 49

Rigor means someone else can look at your work and come to the same conclusions.

There’s no work to fact-check for the guy who “heard things.” It’s hearsay. It’s an anecdote. It’s no better than an opinion.

When someone else can look at your work, it means you no longer need to argue with them. They can look at what you did, see what you saw, and come around to your side.

¶ 50

¶ 50

Rigor means your decisions can be unimpeachable.

If you’re coming to the table with data, and that data means everyone’s going to come around to the same conclusion you did, it means they have to argue about something else, some other way.

¶ 51

¶ 51

Rigor means you can delegate more work, more readily, because it’s all written down and not solely in someone’s head.

What do we need to learn? It’s written down in the research plan. Which means you can hand that off to someone to figure out and come back. And, then, once the research is done, you can also hand off the work of doing something about it, because you can be confident they will see what you saw, and come up with a solution that solves the problems you saw.

¶ 52

¶ 52

And, when other teams are working in a rigorous fashion as well, it means you can reuse work they’ve done, because they’ve written it all down too.

There’s no reason for one team to duplicate another’s effort, and there are no gatekeepers blocking any User Research output.

¶ 53

¶ 53

We ask questions differently than other departments when we do our research.

We ask open-ended questions that are grounded in a person’s actual practices. We do this because people think about things in terms of their current state, because they’ve habituated to them. They’re used to doing things a certain way.

¶ 54

¶ 54

To them, the most obvious solution is always to make the current thing, their current processes, more efficient.

We ask questions differently to get them out of that mode of thinking, but without going so far as to have them prescribe solutions to us. We focus on discovering the underlying problems, the root cause of the symptom that your question comes from.

¶ 55

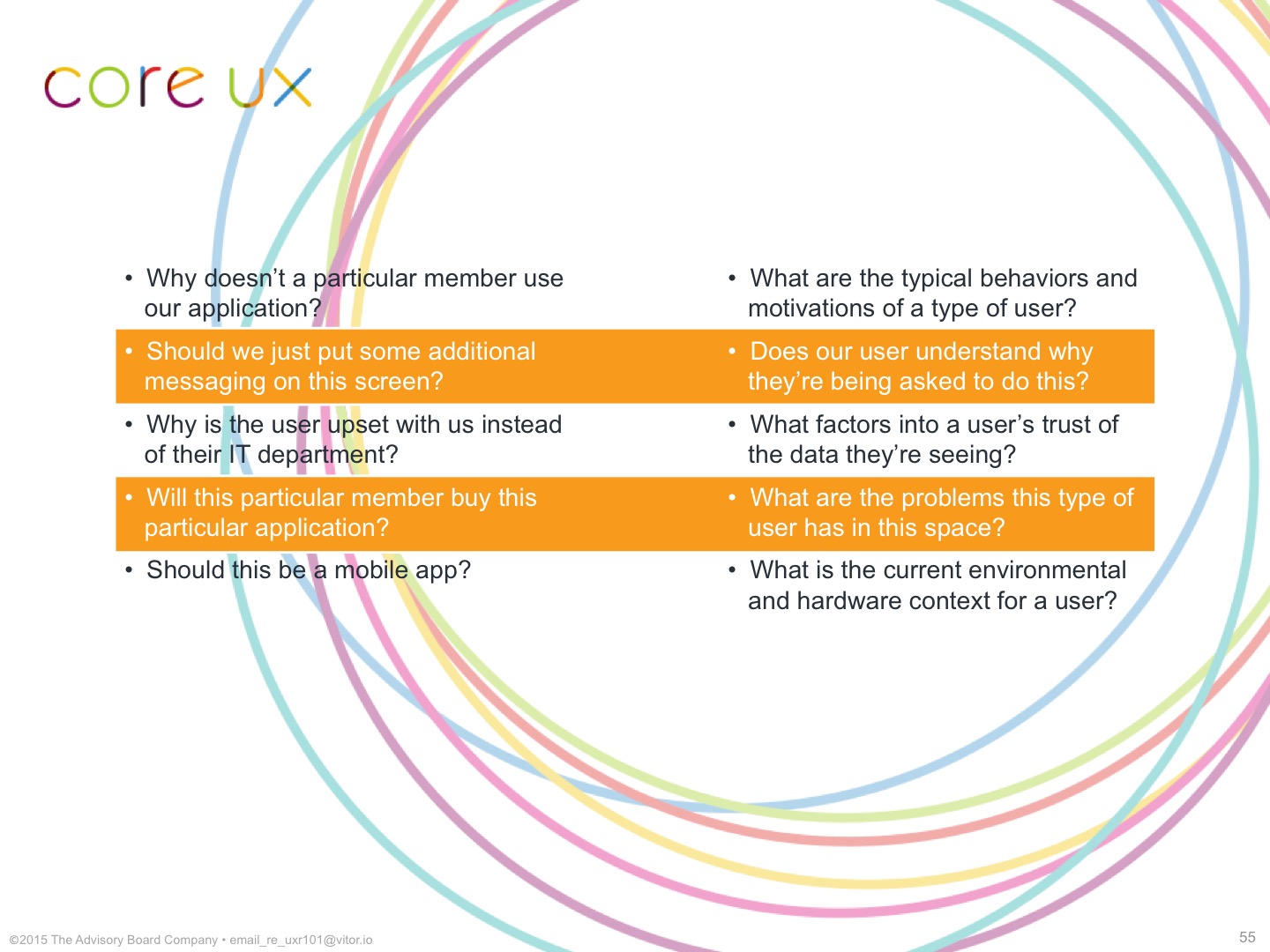

¶ 55

The kinds of questions we’re normally asked to answer are on the left and the deeper, core, problem-seeking questions we investigate are on the right.

We still get you your answer, but in this way we can often also answer subsequent questions, as well as similar questions from other teams.

¶ 56

¶ 56

And, just to tie off this quote with a little more rigor: it’s not a Henry Ford quote.

The last part of how we work is putting all of this together to provide generative and validative value.

¶ 57

¶ 57

Generative value means we’re coming up with original ideas, the big insights, the “wow,” the “blam,” setting the course.

¶ 58

¶ 58

Validative value means we’re confirming, or sometimes rejecting, a particular concept or point or feature, settling an argument, providing a correction or a nudge to keep you more on target.

Because the impact of each type of research can vary, when you come to us with a question, we’ll recommend different scopes of work depending on where you are in your development cycle and how amenable you are to change.

¶ 59

¶ 59

Let me give you a couple of examples.

A new product development team wanted to come up with new product ideas to test. There’s a lot of value in having different, competing ideas to test at that early stage of the process. No-one’s built anything, so there’s very little opportunity-cost in throwing one of them away if it doesn’t perform well in testing. This is what we mean when we say generative research can be course-setting, and have a big impact at the right part of the process.

In another example, another product had already launched to alpha customers when I interviewed the subject-matter experts on the product and reviewed the feedback that had already been collected. Instead of recommending redesigning small portions of it, or discovering the “one feature” that was missing, we came up with two or three similar, related, but ultimately different products that that end-user might use, but there wasn’t really a path to there from where the product currently was. Now, this didn’t kill the product — its sunset was already in the works — but it was another data point in support of the sunset.

If you’re looking for a big “wow,” for a “blam,” for a huge breakthrough, the best time for that is when you can safely stop and start over if necessary, and that usually means early.

¶ 60

¶ 60

Validative research is the smaller, bite-size sort of insight. If you’ve ever tested a wireframe or a mockup before you built it, that’s validative research.

One particular product team is a good example, here. They had us review their app and their interpretation of the research they used. Now, at the time, there was a big push to focus on a large group of potential users. But, for their particular use case, we felt the research was actually saying a particular subgroup should be the target. Now, of course, had they kept focusing on that larger user group, they would have gotten the subgroup as part of it. But, by having us come in and double-check their work, we gave them a nudge in a direction that we think is going to result in a product that is more likely to be used by its narrower, more specialized audience.

¶ 61

¶ 61

I want to give you some examples of integrating User Research into existing processes.

¶ 62

¶ 62

This is the typical launch roadmap for new products. We saw this slide earlier and we talked about how all of the bolded items are places where User Research can help.

(In the actual talk, I gave product-specific examples/team-specific examples for each one of these, where User Research can or has previously helped these parts of the launch process. Because they were all internal examples, I can’t share them here, but one thing that I can share is the “crossing the chasm” example.)

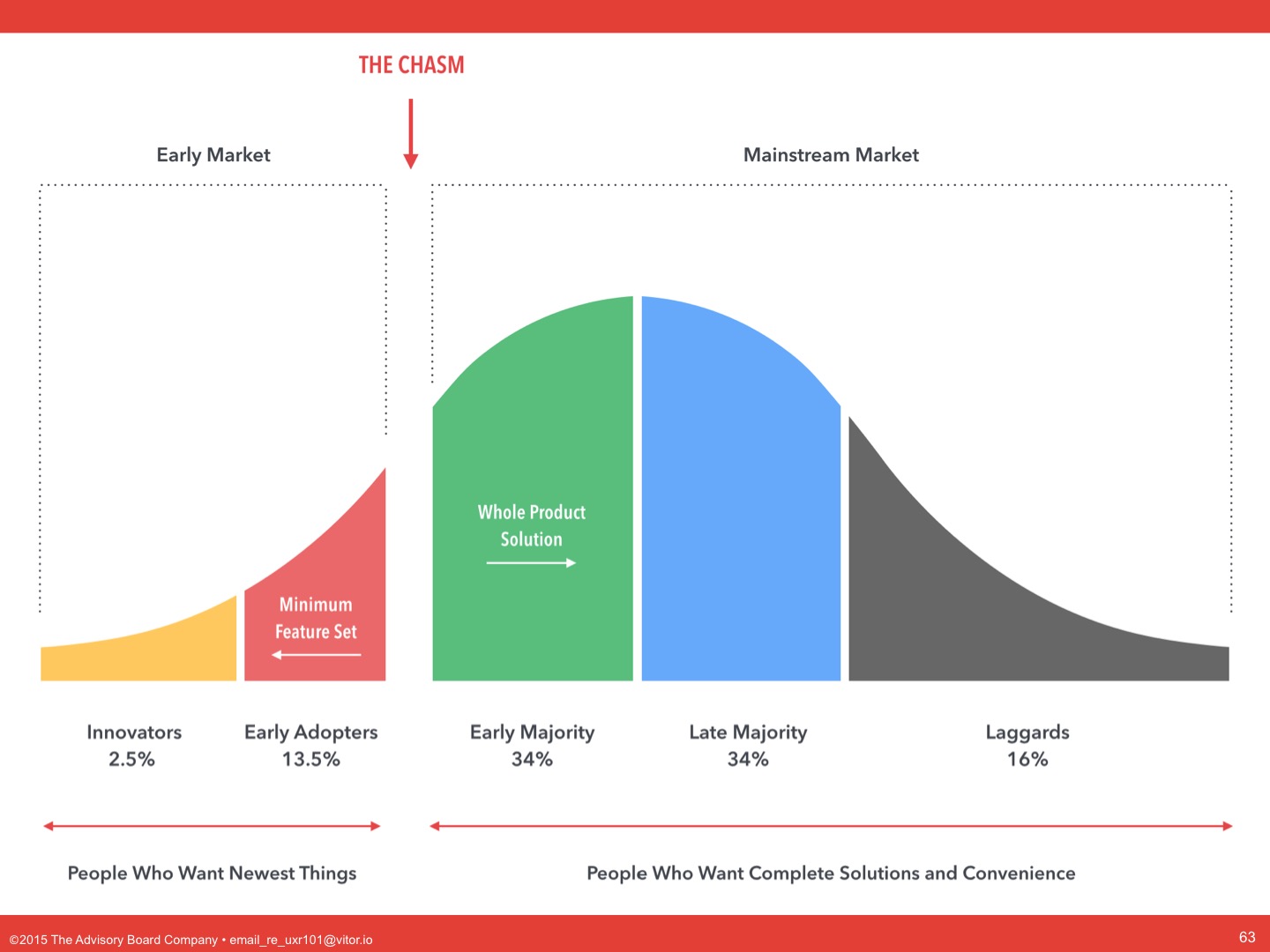

After you’ve hit production launch, how do you manage the feedback that you’re getting from these new mass-market customers that you might not have heard from your early adopters?

¶ 63

¶ 63

User Research can help you manage the feedback you receive from these non-early adopters, and help prioritize your backlog, so you can be more confident about your roadmap decisions.

And, managing the backlog is important. When you look at analyses of agile teams and how much of their backlog they actually develop, you see that teams are developing less than 25% of their backlog. They’re throwing out most of those “features” or “requests” as being irrelevant to the end-user. I’m not misinterpreting the agile goal of building 20% of the features that support 80% of the users or use cases. I’m saying: highly productive agile teams throw away more than three-quarters of their backlog as irrelevant, due to research. Their backlog is not a to-do list.

There are longitudinal studies that say that 45% of developed features are never used, and 65% are rarely or never used. Nearly half the features you spent the time to build in your application, that someone asked for, were never touched by that person. That’s what User Research can tell you, how we can help you throw out the nearly two-thirds of features that most people won’t ever look at.
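To make those percentages concrete, here is a small worked sketch. It assumes the 65% figure counts features that are rarely or never used, so it includes the 45% that are never used. The function name and the 100-feature example are illustrative, not figures from the studies themselves.

```python
def feature_usage_breakdown(features_built, never_pct=45, rarely_or_never_pct=65):
    """Split a count of shipped features using the percentages quoted above.

    Assumption: rarely_or_never_pct includes never_pct.
    """
    never = features_built * never_pct // 100
    rarely_or_never = features_built * rarely_or_never_pct // 100
    return {
        "never_used": never,
        "rarely_used": rarely_or_never - never,
        "regularly_used": features_built - rarely_or_never,
    }


# For a hypothetical product with 100 shipped features:
print(feature_usage_breakdown(100))
# {'never_used': 45, 'rarely_used': 20, 'regularly_used': 35}
```

Under that reading, only about a third of what was built sees regular use.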

¶ 64

¶ 64

Okay, so, this is the Lean Startup feedback loop.

Lean Startup redefines the concept of a startup — or any project to create a new product — to be a cycle in which a group of people learn how to build a sustainable business. That is, you’re not coming up with a product idea, building it, and either selling it or sunsetting it, in that order. You’re coming up with a product idea and testing it, coming up with a sales plan and testing it, coming up with a single feature and testing it, hypotheses and tests, over and over, forever. Every failed test means stopping and trying something else, over and over, forever.

Development is only a small part of that. This cycle is meant to continue through past figuring it all out and launching the product. It’s supposed to continue for every feature, every idea, for the lifetime of the product.

¶ 65

¶ 65

One of the opening examples in the book is how Intuit changed development on TurboTax from one big feature per tax season to five hundred changes in that same two-and-a-half month span, and how it entirely changed how they think about building the product.

When everything is put inside that loop, there are no more arguments about where to spend your time. No more one-off or pet features for particular members or executives. No more roadmap. Just tests.

“Hey, what about…”

Just test it.

“I think we should…”

Just test it.

“Wouldn’t it be neat if…”

Just test it.

Test succeeds, you develop it. Test fails, you don’t. I mean, we just heard that 45% of released features are never used. Someone asked for a feature, someone put it in a backlog, someone developed it, someone released it to general availability, and no-one used it.

In a Build-Measure-Learn loop (and, again, “build” here means build a test, not develop the entire thing) that never happens, because you don’t develop anything unless you built the test, you measured the outcome, and you learned that it was worth doing, or you learned enough to build a little more and test it further.
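The “test succeeds, you develop it; test fails, you don’t” rule can be sketched as a few lines of code. This is a simplified illustration, not a procedure from the book: the `Idea` class, its fields, the example ideas and the numbers are all hypothetical, and it assumes each idea comes with a cheap test and a pass threshold agreed on before the test runs.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Idea:
    statement: str                 # the hypothesis being tested
    run_test: Callable[[], float]  # build the cheapest test, return what was measured
    pass_threshold: float          # agreed on before the test is run


def build_measure_learn(ideas: List[Idea]) -> Tuple[List[str], List[str]]:
    """Return (ideas worth developing, ideas to drop)."""
    develop, drop = [], []
    for idea in ideas:
        measured = idea.run_test()           # build + measure
        if measured >= idea.pass_threshold:  # learn
            develop.append(idea.statement)
        else:
            drop.append(idea.statement)
    return develop, drop


# Hypothetical ideas, each measured as the share of test users who tried the feature.
ideas = [
    Idea("Users want PDF export", lambda: 0.10, pass_threshold=0.25),
    Idea("Users want saved filters", lambda: 0.40, pass_threshold=0.25),
]
print(build_measure_learn(ideas))
# (['Users want saved filters'], ['Users want PDF export'])
```

Nothing reaches the development list without a passing test, which is the point of putting every request inside the loop.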

¶ 66

¶ 66

In Lean Startup, ideas are more than just concepts: they’re testable hypotheses.

This is the first step in how new product development teams traditionally test. (I can’t show you the slide here because it’s an actual slide from one of our new product development teams, but imagine there’s a slide here.) There’s a detailed conceptual solution that they’ve come up with internally, and an executive — a buyer — is asked to grade it.

If the new product development team considers it to be a success, the presentation gets more detailed from here, with features and high-fidelity mockups or prototypes in subsequent tests.

In Lean Startup, product testing starts from a much narrower presentation of the concept, and asks different kinds of questions, in the form of testable hypotheses. Ideas in Lean Startup are broken down into a “value hypothesis” and a “growth hypothesis.”

¶ 67

¶ 67

The value hypothesis tests whether a product or service really delivers value to the customers once they are using it.

User Research can provide the discovery process that explains the customer’s problems and their current processes. This may include mental models, so you can see if your idea fits into the customer’s way of thinking, and process models, to see what parts of their work you’re making better — or risking making worse.

¶ 68

¶ 68

The growth hypothesis tests how new customers will discover a product or service.

For a new product, it’s easy to assume our growth hypothesis is “Sales will sell it.” That’s not the whole story. For a new product, your growth hypothesis is dependent on where you are in the technology adoption lifecycle, so your growth hypothesis has to include: who you need to be selling it to, and why, with the understanding that a feature that might make sense for an early adopter might also have to be redesigned or replaced entirely for the mass-market.

For a new feature in an existing product, your growth hypothesis has to include how customers will discover it, learn how to use it, and evangelize it. A feature that provides value, but no-one can find, fails its growth hypothesis. A feature that provides value, but users won’t tell each other about or provide testimonials for, fails its growth hypothesis.

The same user research that informs the value hypothesis can also inform your growth hypothesis. Understanding the environmental and organizational personas can tell you where each potential customer is in their own technology adoption lifecycle. Understanding flows and processes in organizations can tell you how coworkers communicate with each other and learn.

¶ 69

¶ 69

As we discussed earlier, “build” in Lean Startup doesn’t mean software development.

It means building whatever “enables a full turn of the Build-Measure-Learn loop with a minimum amount of effort and the least amount of development time.” (That’s the Lean Startup definition of MVP, by the way.)

And, the best way to take the least amount of development time is to take no developer time, and build and test storyboards, mockups, and interactive prototypes, informed by the user research from the “ideas” portion of the cycle.

(In the actual presentation, I showed a series of storyboards, mockups, and interactive prototypes from a variety of successful and unsuccessful internal tests and products.)

¶ 70

¶ 70

In Lean Startup, the “product” is the experiment. And, the experiment is your hypotheses: whatever it is you’re testing and how you’re going to test it.

Now, User Research (as a department) may not actually conduct these tests for you. You have interaction designers and product strategists for product-specific work. But, User Research can absolutely help you design the tests, train you and your team to conduct them well, and review your findings with you.

¶ 71

¶ 71

Now, for “measure” and “data,” User Research doesn’t have so much to do with this part.

This is the PM and Dev leadership decision-making part. Maybe we helped you create your hypotheses, and the data should obviously indicate whether you confirmed your hypotheses or not, but you have to make the call as to whether you move forward or test something else.

I will say that, like we talked about User Research asking different kinds of questions earlier, the way you pose your hypotheses and the measures you test them with make or break your ability to make good decisions. Where a new product development team might have some latitude or discretion about considering a testing deck successful or not, Lean Startup is very specific about the types of measures you should be dealing with here, and what counts as a pass or a fail.

¶ 72

¶ 72

“Learn” is where you come back to User Research.

This is what we talked about earlier, where we can collect your hypotheses, and your tests, and your results, and organize and preserve them on an ongoing basis. You should never have to run the same test twice, unless it’s to measure different things or your hypotheses have changed. Even if the entire product team has turned over, the work you did should persevere. We can help make sure your work continues to be referenced, used and appreciated.

¶ 73

¶ 73

This is Product Discovery.

Product Discovery is a design sprint process taught by Jeff Patton and discussed in his book, User Story Mapping. This is the slide we saw earlier, where it maps the stages of the Product Discovery process to the deliverables, and at this point in time, every product manager in the company had gone through a workshop where they were trained in how to conduct Product Discovery design sprints, in order to help them use this process on their own products.

Again, we’re going to go through each one of these and look at how User Research can inform each stage of this process.

¶ 74

¶ 74

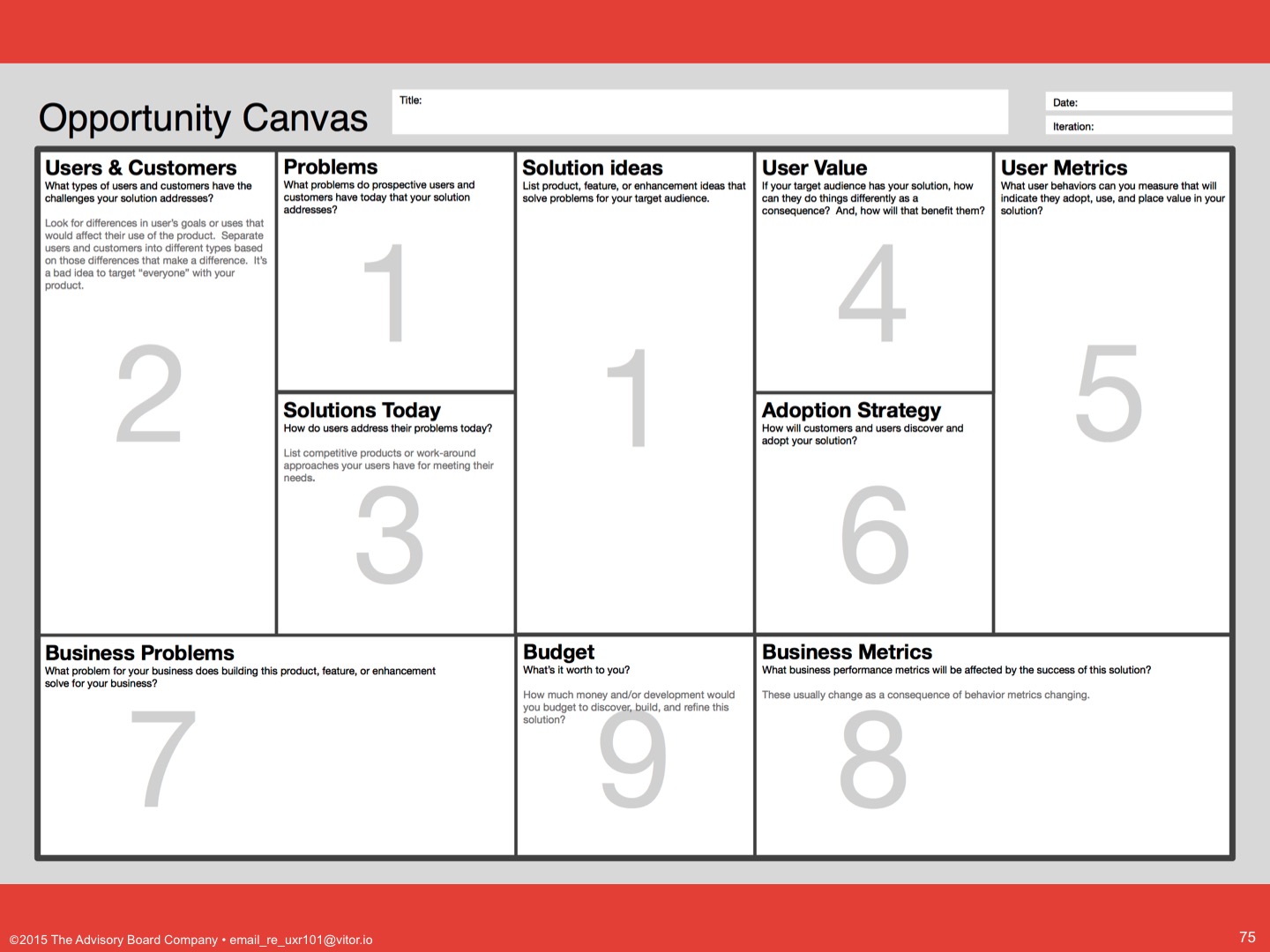

We start with “frame” and the Opportunity Canvas, which looks like this:

¶ 75

¶ 75

It has ten categories to fill in to help you articulate the problem you’re trying to solve and the solution you’re going to try and solve it with.

Seven of these ten categories can be informed by User Research. Your “Users & Customers” could be driven by personas. Their “Problems” and “Solutions” could be informed by in-depth interviews with them. “Solution ideas” could come from workshops facilitated by User Research. “User Value” and “Adoption Strategy” you might remember from Lean Startup’s value and growth hypotheses, both also informed by User Research, as with “User Metrics.”

¶ 76

¶ 76

In Product Discovery training, your workshop group next created a composite story map for your user, that you then had to validate as part of user interviews.

(Here, I would have illustrated examples of actual story maps collected from the workshops that were conducted.)

¶ 77

¶ 77

And, then, everyone remembers setting up research calls with a handful of end-users, and how good you felt when you realized you got your idea at least half right, and how bad you felt for the teams that had to throw everything away and start over.

This is called User Research because it’s user research. Figuring out the questions to ask and how to conduct the calls is what we do, and we can help you do it during Product Discovery, too.

¶ 78

¶ 78

So, by the third day, you have enough information to really refine your product or feature, get really specific about your users and their needs and your solution.

You’re discarding invalidated concepts, you’re updating your opportunity canvas and your personas with what you learned from the interviews, and User Research can help you make sure you’re getting all you can out of that work.

¶ 79

¶ 79

And, then, it’s time to figure out what, exactly, to test and potentially build.

This is the design studio sketching exercise. Everyone doodling their ideas, presenting them and improving them based on seeing everyone else’s concepts.

This is generally product-specific work, and your interaction designers and product strategists should be doing this with you, but User Research may be able to help you facilitate this stage so that everyone can participate.

¶ 80

¶ 80

After the design studio, testing your sketches, mockups or prototypes with users is, again, product-specific work, but as we’ve mentioned before, User Research can help you plan the tests and train you to conduct them well.

¶ 81

¶ 81

Finally, at the end of your testing calls, at the end of the week, you either have validation, or you’ve learned that your idea isn’t going to cut it and you’ve just saved yourself six weeks to six months or more of development time.

Now, our training sessions stopped there, but if you look at the full sets of documentation from Jeff Patton and Co-makers, they continue into how to do agile and how to do SCRUM, and we’re going to look at a particular version of that next.

¶ 82

¶ 82

Dual-track SCRUM first showed up in the literature in a paper coming out of Autodesk in 2006, advocating for an independent discovery and design track that fed the development track, based on their own studies of how their teams worked.

Like we’ve talked about in other processes, this had the benefit of making it so investigations were conducted throughout the product lifecycle, not just at the beginning or end of feature development. It also meant that the designers and researchers were able to affect the requirements for a given feature, not just the design for it.

¶ 83

¶ 83

It looked a bit like this again…

¶ 84

¶ 84

…where the inner ring is your development team focused on delivering the product…

¶ 85

¶ 85

…and the outer ring is your discovery and design team focused on figuring out what your development team’s supposed to be doing.

In fact, modern renderings of a dual-track SCRUM look just like this:

¶ 86

¶ 86

When you add in a discovery track, this becomes a larger, outer loop:

¶ 87

¶ 87

When Product Discovery or another design sprint-type process is your outer track…

¶ 88

¶ 88

…then User Research can conduct research to inform the discovery track’s work, and work with your interaction designers and product strategists to design their discovery cycles, and help plan their validation strategies.

And, that’s dual-track SCRUM, which means that’s the last thing I said I would talk with you about today.

¶ 89

¶ 89

We talked about how User Research knows product management is hard.

¶ 90

¶ 90

We talked about how User Research can take on some of that work.

¶ 91

¶ 91

I showed you how User Research works and the value you see from it.

¶ 92

¶ 92

Then, I gave you examples of User Research integrating into existing processes.

¶ 93

¶ 93

I briefly mentioned earlier that there are no gatekeepers to any User Research outputs, and we wanted to make it clear that our department runs a little differently than others you might have needed help from.

Instead of needing to find out who you might know in a department, or get an introduction, or ask someone for a favor, we’re providing a single entry point for all User Research questions, requests, presentations, training, anything. It’s this email address. We look forward to hearing from you and to working with you.

¶ 94

¶ 94

(Now, this is the callback to the original slides that Erin Howard introduced the talk with.)

These are the workstreams UXR attends to:

- original, structured, new research;

- synthesis and analysis of both new work and of existing work;

- user relationship management, whether you have users or just want them;

- knowledge management and transfer; and

- product team training.

¶ 95

¶ 95

Let’s reframe those as examples.

New research might be creating fresh personas and mental models for users.

Synthesis and analysis of existing work might be going through retention call notes with a fine-toothed comb.

Product team training isn’t just education on your work. It’s also helping you improve your practices: adding that rigor into all the things you do.

User relationship management is both helping you track, and not impose on, your existing users, and helping you design ways to find new users to talk with.

Knowledge management and transfer is us helping you put all your work in one place so you never duplicate effort.

¶ 96

¶ 96

Finally, these are still early days for us. We don’t have tens of thousands of end-users in a database yet.

We look forward to working with you all to get there, but until then, if you want big insights, bring us in early: day 0.

If you don’t have end-user relationships, and your users aren’t consumers, then you’re going to need to budget time, money or both to find them.

But, once you do have those relationships in place, or if we’re working with existing research or secondary sources, then the turnaround can be much quicker.

¶ 97

¶ 97

Again, there are four things you can do, starting today:

- you can plan for the incorporation of User Research into your timelines;

- you can establish user information exchanges between internal resources that already regularly dialogue with end-users;

- you can formalize a plan to regularly collect application metrics about your users; and

- you can record and archive all of your calls and interactions with your end-users.

¶ 98

¶ 98

Here are the sources…

¶ 99

¶ 99

…I used in the talk.

¶ 100

¶ 100

Are there any questions?

Also, please start filling out your feedback forms.

¶ 101

¶ 101