Design Sprint Experience in the Enterprise

(This is a lightly edited copy-and-paste of my response to a question in the Designer Hangout Slack in September 2015. I write "we" throughout because these were common organizational practices, or because I was facilitating a team toward a shared understanding, but in this particular sprint I was the sole facilitator. I routinely run design sprints and facilitate other studio-style workshops as part of my practice.)

Hey folks. Interested in hearing about your experiences with running design sprints in an enterprise. I'm running my first one in a few weeks. I've run small brainstorming sessions and small/quick design studios before, but never a multi-day full sprint with folks from corporate. This should be interesting! Any lessons learned you care to share?

I just ran one a couple weeks ago. Other teams do them internally, but this was my first time facilitating one with a team that had never done them.

I guess, what's the schedule you're following, and what's the experience/comfort/buy-in of the team, and what's your starting scope?

awesome! How did your sprint go? And we're literally at the very start of this. We've yet to decide who will participate and how long it will go. This much is sure: key decision makers from the business (product + strategy teams) have done a ton of research on customer segmentation/persona creation, they have a strong sense of the company's roadmap, and they are keen on building out a long-term multi-channel solution that champions the user.

One exec voiced a concern that even though he'd be excited to be in the sprint, he didn't want his closeness to the project to be a bias on the sprint. So the team, it seems, has the best interest of the exercise in mind, imo.

It went well. We had Jeff Patton come in and run training workshops with all of our PMs last year, so organizationally everyone at least superficially understands that it's a legit process that they should pay attention to. I guess I'll just brain dump some things from what we've learned over time.

First, Jeff Patton did our training, and his book, User Story Mapping, covers his particular design sprint process, Product Discovery, from chapter 14 on.

There are other variations, but this is the one I used, because it's the one all the PMs I have to work with were taught.

Product Discovery frames up a sprint in five days + agile planning: Frame, Empathize, Focus, Ideate, and Test. In practice for us, that maps to the deliverables of opportunity canvas, personas and story maps, user research, design studio, and user testing.

In Product Discovery training, we all did little fake tiny projects, so understanding the scope of what fits the first time you do it was tricky. Other teams here quickly learned that working on a single feature for a single persona best fit into the "one week" framework. The sprint I facilitated was a little different, because the team had never done one before, and hadn't really had a designer ever, either. So we started with the entire product, with the hope that we would narrow the scope as we went.

The recommended sprint team is pretty standard here: a front-end developer, a back-end developer, a PM, a UX person (separate from the facilitator), and sometimes 1-2 other people from other roles if appropriate, like Sales, or Support, or a Business Analyst or something. That core team works together full-time for the entire sprint, and then just for design studio, we open it up to a broader range of stakeholders and interested parties, like additional developers, or support. In this case, someone from our Infosec team and our customer support team joined in.

Day 1 starts with a quick intro to UX and research and the sprint process, with anecdotes about other teams, and answering typical questions like "can't we just copy a competitor's product design?" (no, because they haven't done any design or research either) and "isn't playing with post-its just farting around?" (no, you think differently this way) and "isn't a UML diagram or state machine flowchart better than a story map?" (no, because they're not the linear user experience) and "why can't we just write all this down in Confluence?" (not even Atlassian plans their products that way) etc.

We've all had training so we don't have to do a lot of education, but if you have stakeholders who have no idea what they're about to get themselves into, you might want to spend more than 15 minutes on it. In Jeff Patton's workshops, at least the first hour of each day, and then some time throughout, was presentation on how and why, with lots of videos and examples.

And then we do a story mapping exercise to warm everyone up, where everyone independently story maps their morning routines, and then puts them together, and categorizes them, marks highlights and pain points, etc.

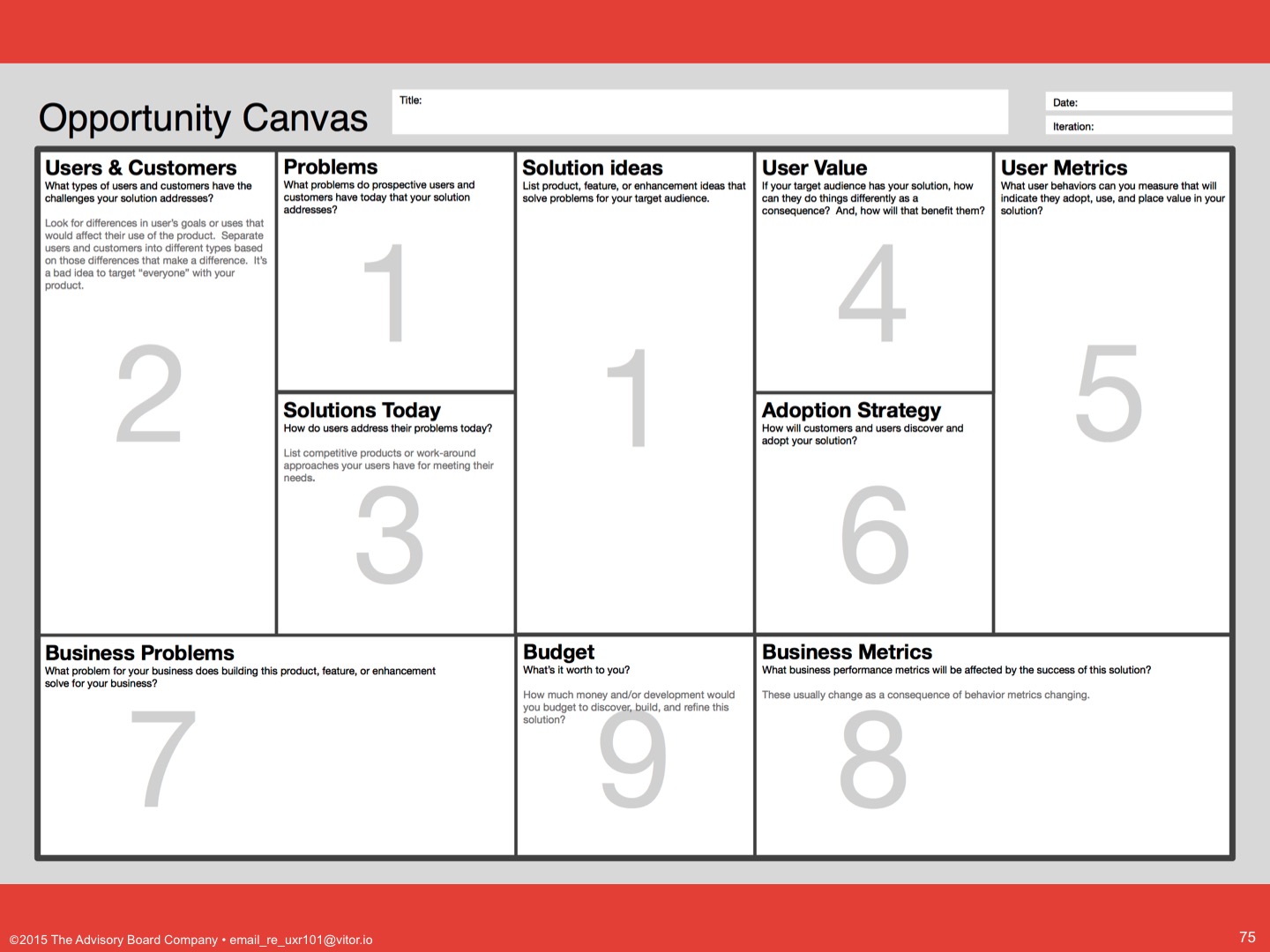

And then we spent the entire rest of the day working through an opportunity canvas for the entire product, which established what they believed were their personas, and value, and pain points they were solving for, etc.

We had our first epiphany then, when someone realized that everything really was an assumption, and they had never validated that a core feature was actually, genuinely needed by anyone. So that was nice.

We also tried out the Javelin Experiment Board as an alternative, but the team felt it wasn't high-level enough for this early stage in the sprint.

Day 2 was personas and story maps, based on the personas that were specified in the opportunity canvas the day before. These are very lightweight. We had five personas, each with "About" (demographics, environment, relevant personal details), "Context" (tasks, workflows, responsibilities), and "Implications" (what these things mean for features they have to use) categories.

All five were developed, and it came about that maybe there needed to be a sixth.

This is the part where I started narrowing scope, because even with heavy timeboxing, we were still completely blowing the schedule. So we flagged pain points or internal points of ignorance in each of the personas, and then everyone got dots to vote on prioritizing the importance of each persona, including the undeveloped sixth one.

So that got us a list of things to parking lot, and one persona and its pain points to focus on, and then we story mapped those.

There were four story maps at the end of the day, and we finished by prioritizing two of them, and coming up with the questions to ask to validate them in the user interviews the next day.

Day 3 was user interviews. We had three user interviews scheduled, and went through the interview script with them, and then after lunch, we put up the opportunity canvas, persona, and story maps to be refined.

The team chose to focus on a single story map and blow it out into multiple, detailed maps, based on what they had heard. That was six additional story maps, and again I took this time to force them to prioritize.

They picked two, one as the highest priority and a second one if they finished that one early.

Day 4 was design studio. We had a UX person join remotely from another office, plus someone from infosec and someone from customer support. Again, this team was tech-heavy, so for this, I emphasized coming up with a solution that they could test, which meant I skipped some of the more creative facilitation I might have done with a roomful of designers.

That meant coming up with examples of similar types of workflows, either in context or in organization, from outside of our products. Examples from Google apps, Facebook, banking, OSes, and others were listed, and then they drew out all of those workflows.

Then they independently drew out a new, pie-in-the-sky workflow based on all of those examples. Then they presented all of those, and iterated based on each other's designs a couple more times. Then they validated internally whether it could actually be built, and they decided to focus on producing high-fidelity comps to test the next day. Without a designer on the team, that meant changing the live app in Chrome Developer Tools and taking screenshots of it, for the most part.

I think those were then loaded into Balsamiq to make a clickable PDF, and that's what was tested on Day 5 on the user testing calls.

Oh, and at some point in there I made them design metrics to measure what their current baseline for that feature was, and ones for post-launch, so they'd be sure that it solved the pain point better.

It closed with story mapping their current feature production and release process, marking pain points and also places where they would have to add new processes they had never done before, e.g. looping in the training and documentation teams.

I don't actually know if the feature is scheduled to be built and go into production yet, but that's the sprint.

They ended up with that, plus six other flows they could do half-sprints for (since they had already partially validated them), plus two additional flows and five other personas they could focus on for additional full sprints.

Lots of potential for good, future work.

It's great that your group was primed by the Patton workshop. I've sat through one myself (though it wasn't done by Patton himself), but none of the others in the group will have gone through something like this, I think.

thanks so much for that!