Thinking with things, designing with sensors, prototyping the Internet of Things, no programming needed (2018 edition)

By request, I'm revisiting how designers can conceptualize, ideate, and prototype physical hardware devices, six years after my iPhone + IFTTT = IoT wifi sensor platform talk, "Experimenting with the Internet of Things" workshop, and Distance essay.

Prior efforts

In 2011, I backed a Kickstarter for a new company and a new connected sensor product called Twine. You put a battery in it, optionally plugged in an extra sensor (temperature, vibration, and orientation sensing were built in; moisture, magnetic switch, and custom sensors were extra), connected it to wifi, and it could notify you over text, Twitter, or email.

It was one of three Kickstarters launched around the same time, the other two being Ninja Blocks and Node. Each made different tradeoffs in its product.

If you didn't want to wait on a Kickstarter that had even odds of failing, but you knew at least a little bit of JavaScript, there was another option: in April and May 2012, I presented a talk on turning an old iPhone into a connected sensor (here's a PDF of the slides; you don't get the embedded videos, and I'll have to dig up the original Keynote presentation).

If you didn't know at least a little bit of JavaScript, though, there wasn't much you could do with the Internet of Things or sensors at this time; everything required programming, electrical engineering, or both.

That short talk turned into a 45-minute talk and demo for developers, called "Experimenting with the Internet of Things." In it, I covered definitions, examples, technology options, and a new framework for conceptualizing and ideating with sensors and connectivity.

That framework became early drafts of my Distance essay, You and Your Designs, but didn't actually make it through the editing process. The Internet of Things was one of five domains called out as critical for designers to understand, but "how" was left as an exercise for the reader.

Finally, that work became a series of conference talk proposals, some in partnership with Stephen Anderson (who had his own framework), none of which were ever accepted.

Dead pool

It's worth discussing the pace of change, and the bets that individuals and companies made that did and didn't pay off.

😣 Supermechanical is still in business, but no longer produces or supports the Twine sensor or Spool cloud platform, focusing instead on connected thermometers.

😣 Node is still in business, but pivoted to focus on their color sensor.

💀 Ninja Blocks shut down after running out of money producing their Sphere sensor hub.

🤓 Electric Imp is still in business, and continues to build on the platform they started out with.

🤓 PhoneGap, used in the iOS IoT prototype, was acquired by Adobe, and released as an open source project. It is still actively developed.

💀 Mandrill, used in the iOS IoT prototype, is now an add-on for paid monthly MailChimp accounts, and is no longer available as a standalone service.

😣 IFTTT, used in the iOS IoT prototype, is still in business, but how they connect to applications, services, and sensors is very different now.

😣 Pachube, used in the Hot Potato prototype, was acquired by LogMeIn and renamed Cosm, then Xively, with its community-friendly functionality called Xively Personal. As Xively pivoted to focus on commercial and enterprise applications, Xively Personal was discontinued, and Xively was later acquired by Google.

💀 The Kickstarter for the Daisy WiFi sensor failed to achieve its funding goal.

😣 The Kickstarter for the Wovyn platform and sensors failed to achieve its funding goal, but the company appears to still be in business, despite an outdated website.

🤓 Skylanders went on to have five sequels, each with additional toys; six spin-off mobile games; two seasons of a cartoon series on Netflix; and more. Three competing lines of NFC toys and video games also came out.

💀 Necomimi, the mind-reading, animatronic cat ears, are no longer in production. The follow-up effort, Tailly, did not reach its Kickstarter funding goal.

Definitions

The definition for "Internet of Things" in my framework starts by throwing out the existing commercial and industrial definitions. By their nature, they put expectations, aspirations, and social, moral, or commercial lenses onto something that doesn't inherently have those things.

As designers, we need the simplest, most fundamental definition, one from which all of the other properties can emerge. That definition is:

The Internet of Things means that real-world objects are addressable and connected.

Additional sensors are even optional, because if you can't reach a device, that still tells you something.

I classify connectivity as "smart" or "dumb", like mainframes versus workstations: either a device can initiate a connection on its own, or something else has to request a connection from it.

A "smart" connection is one where the physical device (whatever it may be: an iPad, a toy figure, a pair of cat ears) has the ability to make a connection of its own. It doesn't need to be polled; it can push data elsewhere of its own accord.

A "dumb" connection, then, is one where the physical device cannot make a connection on its own. Another device has to poll it, has to request data from it, maybe even has to power it.

Note that we're only discussing the connective ability of a uniquely addressable real-world object, here, not computing power. This is important, because nothing in our definition absolutely requires onboard smarts, or onboard power, or interfaces, or anything. Many of those things are emergent from our basic definition, but they are not required by it.
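To make the smart/dumb distinction concrete, here is a minimal Python sketch. It's a toy model, not any real device's API: `SmartDevice`, `DumbDevice`, and `poll` are hypothetical names. The smart device pushes a reading whenever it senses something; the dumb device only answers when something polls it, and a failed poll is treated as information in its own right.

```python
from typing import Callable, List, Optional, Tuple


class SmartDevice:
    """Can initiate a connection on its own: it pushes readings out."""

    def __init__(self, name: str, send: Callable[[str, float], None]):
        self.name = name
        self.send = send  # whatever the device connects out to (hypothetical)

    def sense(self, value: float) -> None:
        # Nobody asked; the device reports of its own accord.
        self.send(self.name, value)


class DumbDevice:
    """Cannot initiate a connection: it only answers when polled."""

    def __init__(self, name: str, reachable: bool = True):
        self.name = name
        self.reachable = reachable
        self.value = 0.0

    def read(self) -> float:
        if not self.reachable:
            raise ConnectionError(f"{self.name} did not respond")
        return self.value


def poll(device: DumbDevice) -> Optional[float]:
    """The poller does the work. None means 'couldn't reach it', which is itself a signal."""
    try:
        return device.read()
    except ConnectionError:
        return None


# Usage
received: List[Tuple[str, float]] = []
ears = SmartDevice("cat-ears", lambda name, value: received.append((name, value)))
ears.sense(0.8)

figure = DumbDevice("toy-figure")
figure.value = 42.0
print(received, poll(figure))
figure.reachable = False
print(poll(figure))
```

Notice that in neither case does the object itself need much computing power; what differs is who starts the conversation.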

Exercises

Let's take a minute and make a list of five things, five traditional, dumb, household objects we can augment and learn to interact with using this basic idea of a real-world object that is addressable and connected.

My list looks like this: washing machine, pill bottle, water pitcher, front door, nightstand.

So, let's say you have a Twine. Let's talk about how you might use it. I'll start, because I'm already talking and I enjoy hearing myself speak.

So, you put it on your washing machine, and what happens? The accelerometer goes off when the machine vibrates, right? Which means now your machine can "tell you" when its cycles start and stop.

Tape it to the bottom of your pill bottle. Accelerometer goes off when you pick it up to take a pill out, and now your wife knows if you're taking your medicine.

Place it in your water pitcher, accelerometer goes off when you pull it out of the fridge... but maybe that's not accurate enough. Maybe add a moisture sensor, tape it to the lid. Now you can track how many times you've refilled it so you know when to replace the filter.

Place it on your front door, the accelerometer goes off when someone knocks.

My exercise said, okay, you have one sensor, an accelerometer, and you have a physical device that you thought of earlier in the talk. What does that physical device do to make that accelerometer move, in any direction, and what can you infer from that?

As people go around the room offering single ideas, their ideas become more narrative as they warm up. There's more storytelling, which lets you discover the real use cases: the specific movement of unscrewing a pill bottle cap, for example, instead of any movement of the pill bottle. That's what the repeated slide with "Sociological" and "Cultural" on the left, and "Emergence," "Storytelling," and "Narrative" on the right, means.

We put so much very local meaning into the definition and into our expectations that we have blinders on. We should be exploring these devices through a definition that allows use to be emergent, and the way to do that isn't with giant, rigid IoT concept worksheets; it's through storytelling around the fundamentals: sensors, uniquely addressable devices, and smart or dumb network connectivity. You can repeat the exercise with different sensors, with combinations of sensors, by switching between types of connectivity, and so on.

After that exercise, which ended with the assertion that you can go build any of those ideas today, using an old iPhone, I talked about the Skylanders game, which uses RFID, and then I talked about ARDX, the experimentation kit for Arduinos, which gives super newbie walkthroughs of playing with each individual sensor, and motor, and such: http://www.adafruit.com/products/170

Thursday workshop video (~30m)

Thursday workshop TOC (<5m)

- Conceptual model (fundamental definition)

- Conceptual framework (material exploration, speculative narrative)

- Constructive model (dataflows)

- Constructive framework (Generominos)

- Applied practice (LittleBits, Node-RED)

Thursday anti-TOC (<5m)

- History of the Internet of Things

- Other definitions of IoT

- Defunct or niche platforms, services, protocols

- Anything that requires programming or electrical engineering

- Historical demos and exercises

- Historical examples

- Self-evidence, tangibility, embodied cognition, epistemic actions

Fundamental definition (15m)

We're doing our initial framing in terms of IoT, but any work with sensors, like for an interactive art installation, smart home devices, etc., can be enabled by the same kind of thinking and the same exercises we're going to do today.

First do these:

- What sensors are you interested in understanding or in using in a design? Write down a few of them.

- What "Internet of Things" or smart home devices can you think of? Do you own any?

Then, talk about these:

- Nest thermostat

- Dash button

- Magic Band

- HomePod(!)

Provide the designer's IoT definition.

@vitor_io Yup. That's basically where I'm at too.

— Tom Coates (@tomcoates) August 14, 2012

The definition for "Internet of Things" in my framework starts by throwing out the existing commercial and industrial definitions. By their nature, they put expectations, aspirations, and social, moral, or commercial lenses onto something that doesn't inherently have those things.

As designers, we need the simplest, most fundamental definition, one from which all of the other properties can emerge. That definition is:

The Internet of Things means that real-world objects are addressable and connected.

Additional sensors are even optional, because if you can't reach a device, that still tells you something.

I classify connectivity as "smart" or "dumb", like mainframes versus workstations: either a device can initiate a connection on its own, or something else has to request a connection from it.

A "smart" connection is one where the physical device (whatever it may be: an iPad, a toy figure, a pair of cat ears) has the ability to make a connection of its own. It doesn't need to be polled; it can push data elsewhere of its own accord.

A "dumb" connection, then, is one where the physical device cannot make a connection on its own. Another device has to poll it, has to request data from it, maybe even has to power it.

Note that we're only discussing the connective ability of a uniquely addressable real-world object, here, not computing power. This is important, because nothing in our definition absolutely requires onboard smarts, or onboard power, or interfaces, or anything. Many of those things are emergent from our basic definition, but they are not required by it.

Review the Nest, Dash, and Magic Band in terms of the definition.

Review things from each person's list of IoT devices in terms of the definition.

Material exploration (15m)

Everyone take out your phones.

Everyone open the camera app.

Everyone take a picture of me.

What sensors were in use there?

- Camera (imaging sensor)

- Touch screen (capacitive multi-touch sensor)

Why do I call a camera an imaging sensor? Because your use of that sensor to take a still image is contextual. The imaging sensor is capable of more than that, and the data you can extract from it is ever-expanding.

Did you have to unlock your phone to get to the camera?

- Fingerprint sensor (biometric sensor)

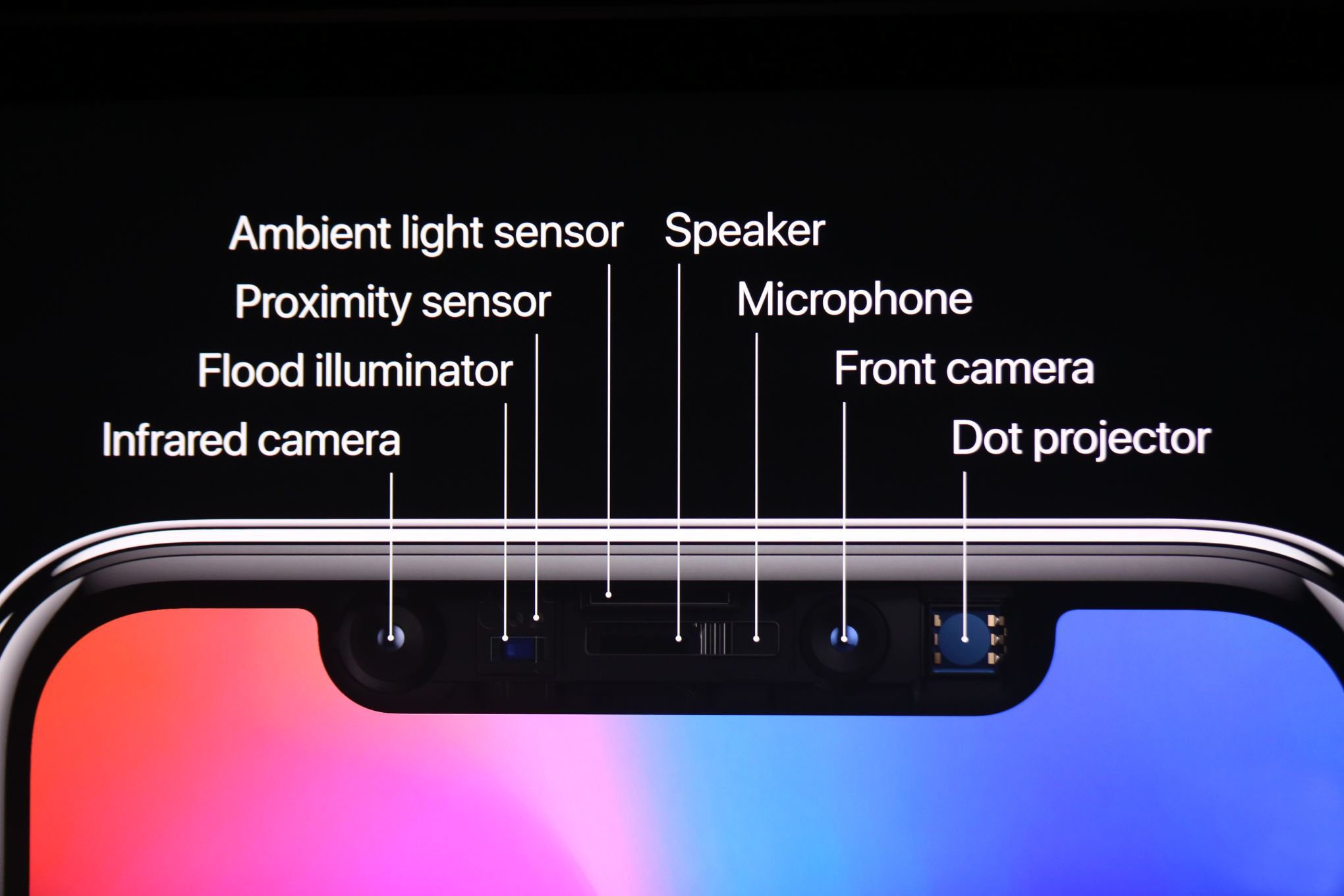

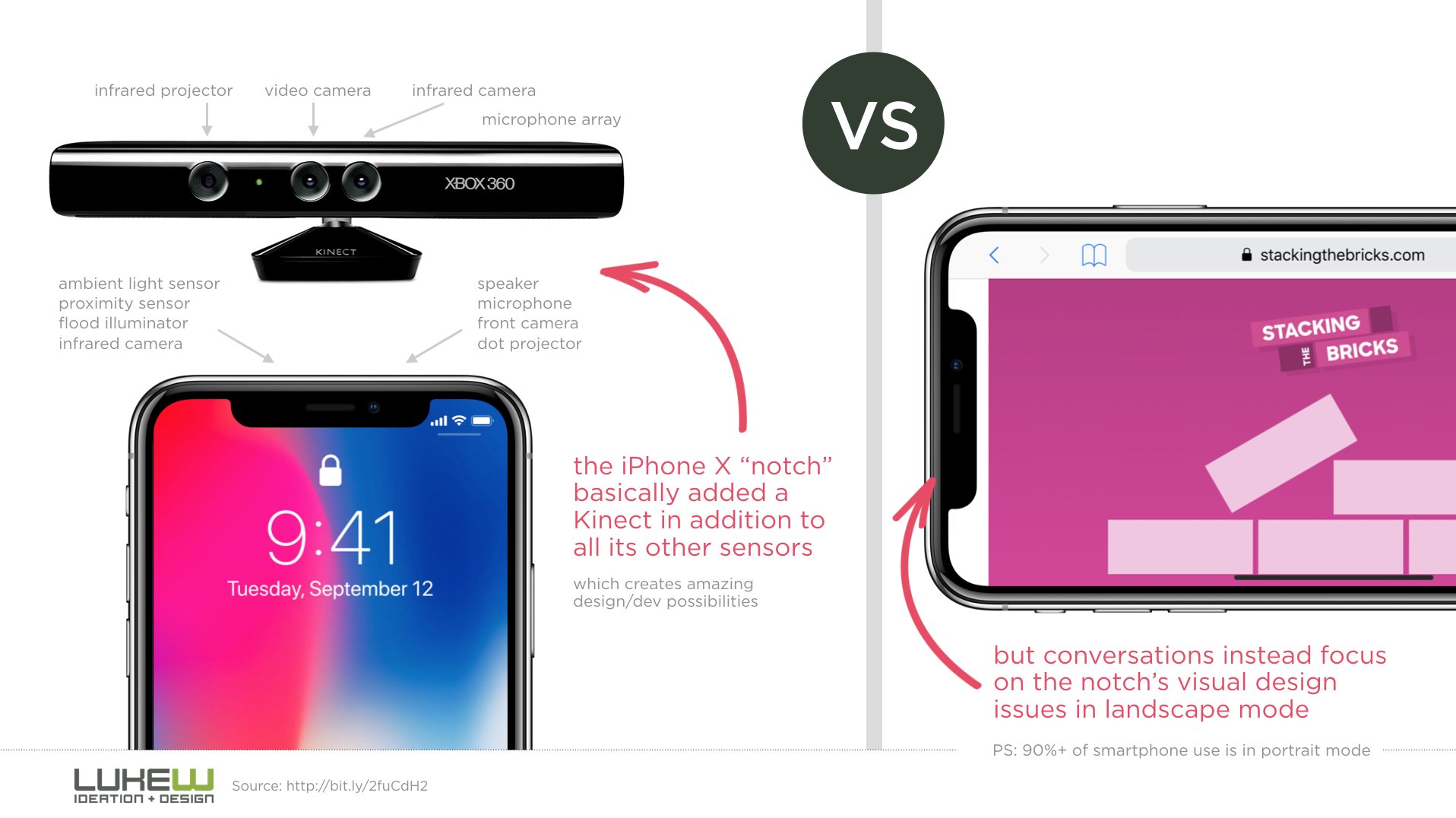

- Face ID (infrared imaging sensor, proximity sensor, ambient light sensor, microphone, front camera, infrared dot projector)

Without looking it up, what other sensors does your phone possess?

iPhone 2009: https://www.lukew.com/ff/entry.asp?828

Who thinks they've written down 100% of the sensors on their phone?

The conceptual framework is a basis for a materials exploration.

It's an in-depth study of a subject through the properties, both innate and emergent, of the materials at hand.

Now, you don't know enough about sensors to explore what one does and how it behaves, or to test its limits. You don't have enough technical ability to buy a sensor, wire it up, program it, connect it to the internet, etc. I want to be clear that designing with and for sensors means working with a completely new material and a completely new skill set. Going from desktop to web, or web to mobile, still meant designing for 2D screens. This is something else.

But that's okay, because as designers we're used to not knowing about our subject; that's why we do research, design studios, and critiques. We're going to apply those here to come to a conceptual understanding of a sensor and its potential applications, and to generate speculative narratives about them, even if we later learn in real life that it won't quite work out that way.

The prompt is:

What if I duct-taped my phone to a household object? What would it sense and when? What could I learn?

Think of five household objects. Five nouns. Write them down.

Here's mine:

- Washing machine

- Pill bottle

- Water pitcher

- Front door

- Nightstand

Let's pick a sensor, say, your phone's accelerometer, which detects motion.

So, you put it on your washing machine, and what happens? The accelerometer goes off when the machine vibrates, right? Which means now your machine can "tell you" when its cycles start and stop.
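If you were to build the washing machine idea, "cycles start and stop" needs a little more care than "the accelerometer goes off," because a washer pauses between fill, wash, and spin. Here's a hedged Python sketch, assuming the phone logs `(timestamp, x, y, z)` readings in g. The 0.05 g threshold and the two-minute quiet period are made-up starting points you'd calibrate against your own machine, and `cycle_events` is a hypothetical name.

```python
import math
from typing import Iterable, List, Optional, Tuple


def cycle_events(
    samples: Iterable[Tuple[float, float, float, float]],
    threshold: float = 0.05,      # hypothetical: deviation from 1 g that counts as shaking
    quiet_seconds: float = 120.0,  # hypothetical: stillness needed before calling it "stopped"
) -> List[Tuple[str, float]]:
    """samples are (timestamp_s, x, y, z) in g; returns [("start", t), ("stop", t), ...]."""
    events: List[Tuple[str, float]] = []
    running = False
    last_motion: Optional[float] = None
    for t, x, y, z in samples:
        # At rest the accelerometer reads about 1 g (gravity); vibration shows up as deviation.
        shaking = abs(math.sqrt(x * x + y * y + z * z) - 1.0) > threshold
        if shaking:
            last_motion = t
            if not running:
                running = True
                events.append(("start", t))
        elif running and t - last_motion >= quiet_seconds:
            # Only a long enough pause counts; short pauses between phases are ignored.
            running = False
            events.append(("stop", last_motion))
    return events


# Usage: shaking at 10-20 s, a short pause, more shaking at 100 s, then stillness.
log = [(0, 0, 0, 1.0), (10, 0, 0, 1.2), (20, 0, 0, 1.2), (60, 0, 0, 1.0),
       (100, 0, 0, 0.8), (150, 0, 0, 1.0), (300, 0, 0, 1.0)]
print(cycle_events(log))
```

The pause at 60 seconds doesn't end the cycle because it's shorter than the quiet period; that's the difference between "any movement" and "the cycle stopped."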

Tape it to the bottom of your pill bottle. Accelerometer goes off when you pick it up to take a pill out, and now your wife knows if you're taking your medicine.

Place it in your water pitcher, accelerometer goes off when you pull it out of the fridge... but maybe that's not accurate enough to track genuine usage. Maybe you have to combine it with your orientation sensor so you can sense tilt. Or maybe you have to tape it to the lid, to sense refills, a proxy measure.
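Sensing tilt doesn't strictly need a separate sensor: when the pitcher is held still, the accelerometer reading is just gravity, and the angle between that vector and the device's z axis is the tilt, $\theta = \arccos(z / \lVert a \rVert)$. A minimal Python sketch, assuming the phone is taped so that an upright pitcher reads roughly (0, 0, 1) g; the 45° pouring cutoff and the function names are hypothetical.

```python
import math


def tilt_degrees(x: float, y: float, z: float) -> float:
    """Angle between the device's z axis and gravity, from a resting accelerometer reading in g."""
    magnitude = math.sqrt(x * x + y * y + z * z)  # about 1 g when the pitcher is still
    # Clamp to guard against rounding just outside [-1, 1] before acos.
    cos_angle = max(-1.0, min(1.0, z / magnitude))
    return math.degrees(math.acos(cos_angle))


def is_pouring(x: float, y: float, z: float, pour_angle: float = 45.0) -> bool:
    """Hypothetical rule: tipped past pour_angle means someone is pouring."""
    return tilt_degrees(x, y, z) > pour_angle


print(round(tilt_degrees(0.0, 0.0, 1.0)))   # upright
print(round(tilt_degrees(0.0, 0.87, 0.5)))  # tipped
print(is_pouring(0.0, 0.87, 0.5))
```

Counting pours this way is still a proxy for genuine usage, just a closer one than "it moved."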

Place it on your front door, the accelerometer goes off when someone knocks.

Quick, let's go around, pick one sensor, pick one object, what can you learn?

Now, the last time I delivered this workshop to designers, that's where we ended. We just went around, building on those conceptual narratives. If you get together after this over lunch and just brainstorm this very activity, you'll quickly find your narratives and your sensing gets more interesting, more detailed, more subtle. That's the emergent, narrative, storytelling, contextual framework here. In 2012, there wasn't any way for non-technical designers to take their ideas any further.

It's 2018, and there are three new opportunities available to us, which means we no longer have to stop there.

Dataflows (5m)

In the 1970s, a guy at a bank invented the idea of defining applications not as file after file of code, but as "black boxes" of self-contained functionality: all you know is what each black box expects as input and what it generates as output, and you connect the boxes to each other to build your application, accomplish your task, etc.

If you've ever made a flowchart, it's kind of like that.

If you've ever diagrammed an application flow, it's kind of like that.

If you've ever done a domain model, it's kind of like that.

If you've ever done a journey map, it's kind of like that.

You are taking components with defined inputs and outputs, and connecting them to each other to define a larger system.
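As a rough illustration in code, each black box below is just a function from input to output, and `connect` wires them output-to-input into a larger system. The box names and the tenths-of-a-degree sensor format are hypothetical, and this sketch only handles a straight chain; dataflow tools also let one output feed several inputs.

```python
from typing import Any, Callable

Box = Callable[[Any], Any]  # a black box: all we know is what goes in and what comes out


def connect(*boxes: Box) -> Box:
    """Wire boxes output-to-input, left to right, into one larger box."""
    def flow(value: Any) -> Any:
        for box in boxes:
            value = box(value)
        return value
    return flow


# Hypothetical boxes; any one can be swapped without touching the others.
def celsius_reading(raw: int) -> float:
    return raw / 10.0  # assumed: the sensor reports tenths of a degree


def to_fahrenheit(celsius: float) -> float:
    return celsius * 9 / 5 + 32


def describe(fahrenheit: float) -> str:
    return f"It is {fahrenheit:.1f}°F"


thermometer = connect(celsius_reading, to_fahrenheit, describe)
print(thermometer(215))
```

Because `thermometer` is itself a box, it can be wired into a bigger flow just like its parts.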

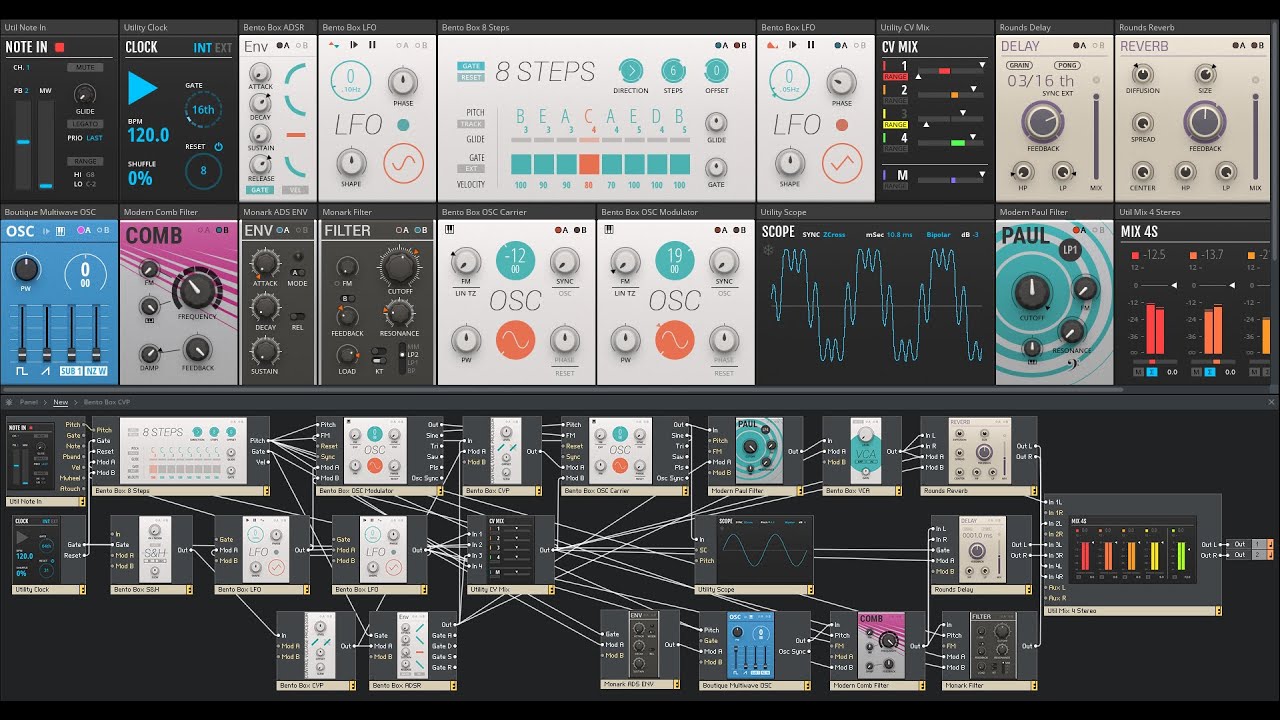

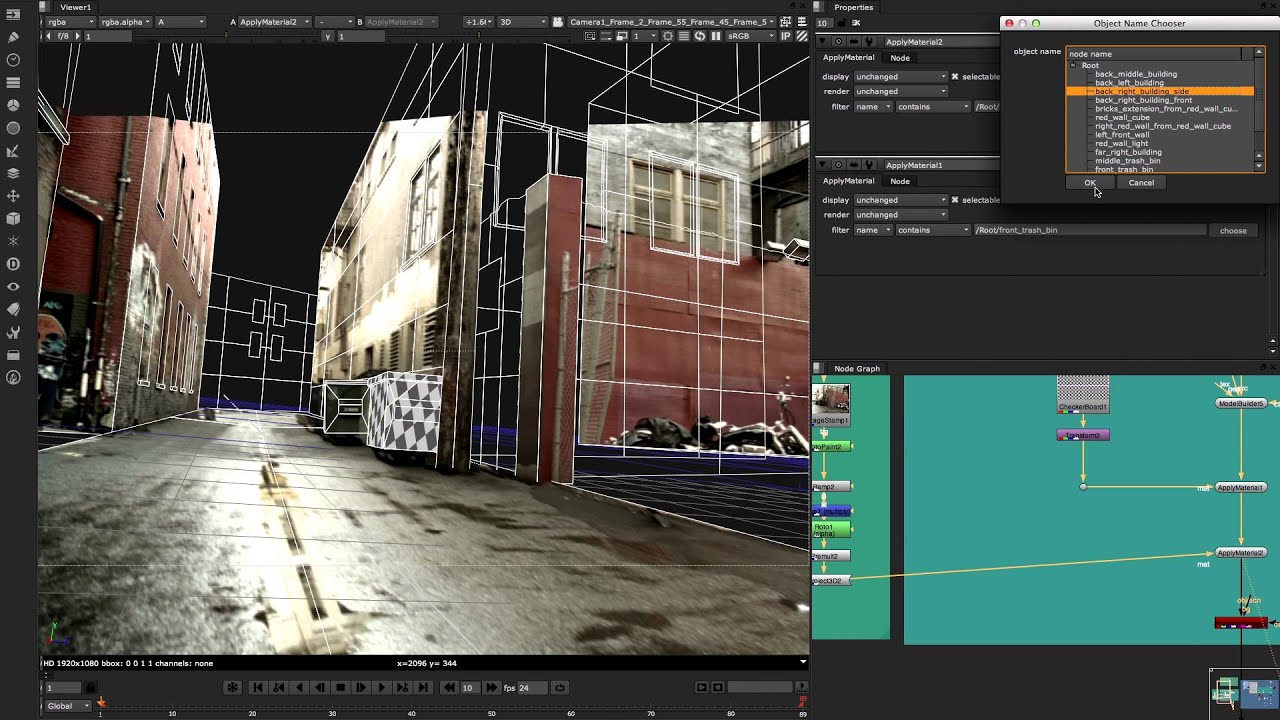

This paradigm, also called flow-based programming, node-based, dataflows, or visual programming, is used everywhere. You've probably seen it before without realizing what it was.

It's used in music production, such as in this screenshot of Reaktor, where the UI controls on the top affect the audio as wired up in the patches below.

It's used in video production, such as in this screenshot of Nuke, where video footage, 3D objects, lighting, and more are composited together based on the nodes wired up on the right.

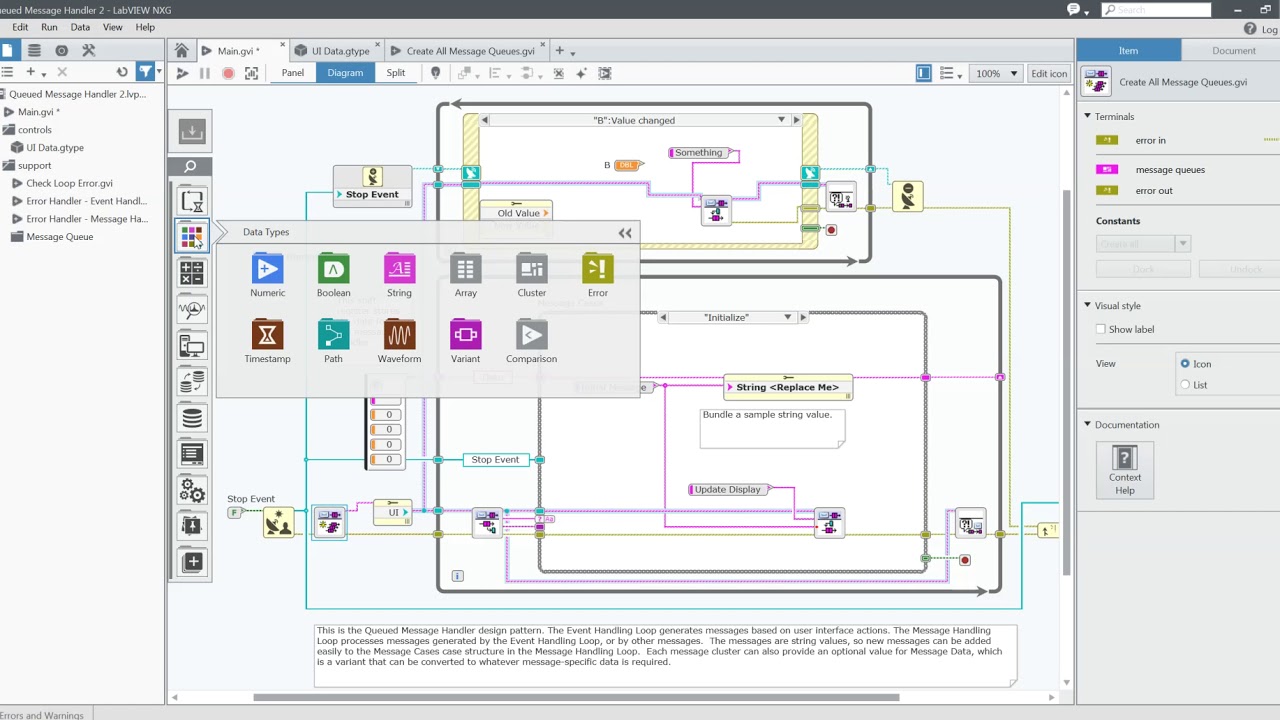

It's used in electronics, such as in this screenshot of LabVIEW, made at National Instruments down the street, to allow hardware engineers to design and test electronics without software programming.

It's used in video games, such as in this screenshot of Unreal Engine 4, which uses its "Blueprints" system to allow artists to build not only interactions, but entire video games, without programming.

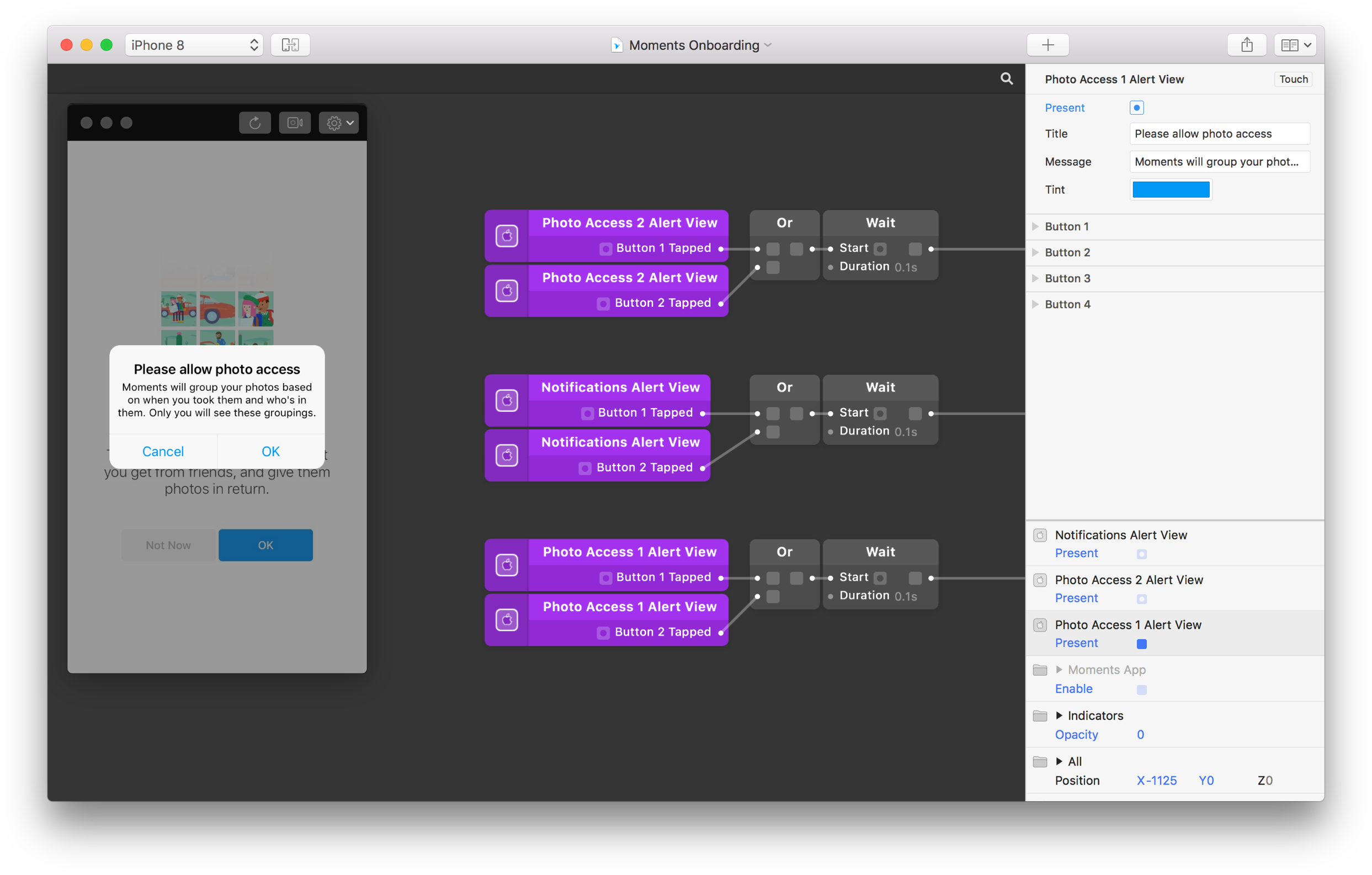

It's used in interaction design, such as in this screenshot of Origami Studio, which uses patches to define components and wire up interactions for interactive prototypes.

In programming, building dataflows forces you to be very explicit about inputs, outputs, and behavior, because you don't know in what larger context your black box will be used.

In design, thinking in terms of dataflows allows you to bridge the gap between a conceptual exercise, and what would actually be necessary to make your idea real.

Generominos (5m)

Generominos is the first reason I'm able to extend this talk. Published late last year, Generominos is a constructive framework for representing interactive systems as dataflows. It's designed for interactive art and games, so it doesn't have cards for every sensor or output even your phone has, but it has a lot, and each deck includes around fifteen blank white cards for adding your own.

The cards with solid backgrounds and borders are prompts, called scenario cards. We'll set those aside for now, but you can play with them later.

The cards with the blue bar on the top are input cards, and some of them specifically have a "sensor" icon. At the bottom of each card is a type of output.

You process that output one or more ways, to make it either directly useful or ready to transform further, using conversion cards, which have no bars, icons at the top and bottom, and a solid background color.

The final output is a card with a red bar at the bottom.

In our accelerometer example from before, you'd take an "accelerometer" sensor input, which sends a squiggly datapoint line as output.

We want to register only when a certain measurement or range of measurements happen, so we'll convert that using the "threshold value" card, which now gives me enter, exit, and in-threshold events.

An event is great, but I need, like, an email, or a description to go into a database, so I'll convert the event into text using the "generative text" card.

And because this is meant for art, there isn't a "send an email" or "text me" output card, so I made one. Send an SMS to a preconfigured phone number, it says. Why did I specify preconfigured? Because if I needed to get the phone number from somewhere, I'd need a dataflow that receives that input, processes it, and pulls it in here.
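The same accelerometer-to-SMS dataflow can be sketched in Python with one function per card. The function names mirror the card names; the threshold range, message wording, and phone number are hypothetical, and the SMS card is stubbed to write into a list rather than call any real SMS service.

```python
from typing import Iterable, Iterator, List, Tuple

PRECONFIGURED_NUMBER = "+15555550123"  # hypothetical number, fixed ahead of time


def accelerometer(readings: Iterable[float]) -> Iterator[float]:
    """Input card: a stream of datapoints, the 'squiggly line'."""
    yield from readings


def threshold_value(stream: Iterable[float], low: float, high: float) -> Iterator[str]:
    """Conversion card: emits 'enter', 'in-threshold', and 'exit' events."""
    inside = False
    for value in stream:
        now_inside = low <= value <= high
        if now_inside and not inside:
            yield "enter"
        elif now_inside:
            yield "in-threshold"
        elif inside:
            yield "exit"
        inside = now_inside


def generative_text(events: Iterable[str]) -> Iterator[str]:
    """Conversion card: turns events into human-readable text."""
    messages = {"enter": "Motion detected.", "exit": "Motion stopped."}
    for event in events:
        if event in messages:  # skip the repetitive in-threshold events
            yield messages[event]


def send_sms(texts: Iterable[str], outbox: List[Tuple[str, str]]) -> None:
    """Output card (stubbed): records each message instead of sending a real SMS."""
    for text in texts:
        outbox.append((PRECONFIGURED_NUMBER, text))


# Usage: wire the cards together, input to output.
outbox: List[Tuple[str, str]] = []
readings = [0.0, 0.3, 0.4, 0.35, 0.02, 0.0]  # made-up motion magnitudes
send_sms(generative_text(threshold_value(accelerometer(readings), 0.2, 2.0)), outbox)
print(outbox)
```

Writing it out this way surfaces decisions the cards leave implicit, like what to do with all those in-threshold events.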

Constructing dataflows forces you to think through what was previously a speculative narrative and get a more specific, more detailed understanding of how sensors function and how interactions are possible.

What do you do when there isn't a Generomino card for the sensor or process or output you need? You make one after doing your research. A card in this constructive model that isn't based on reality is like a persona written based on assumptions. It's going to fall down when you try to implement it for real.

You have examples of sensors and data types in these existing cards. Look up those sensors on the internet first and understand where they're getting that information from. Apply that to your new sensor card. Discuss it in a group. Talk to subject matter experts. Critique it. And, when you're done, let me know, and I'll help you contribute it back to the official Generominos card set.

Where do you find sensors? To start, you can browse maker-friendly electronics sites like SparkFun and Adafruit. They sell things in single unit quantities to individuals, and explain things a lot more thoroughly than a larger electronics vendor would.

But, remember, too, that some technology will take years to trickle down into maker-friendly shops. Today, that's technology like Lighthouse tracking for mapping physical objects in VR.

It doesn't mean you can't speculate on designs using it, it just means you may have to learn to read technical datasheets and talk with SMEs to make sure you're understanding it right.

Paying attention to new and emerging technologies in various fields, and talking with SMEs, can help you keep current.

LittleBits (5m)

LittleBits is the second reason. LittleBits is an electronics kit, which requires no electrical engineering and no programming. Every sensor, converter, or output comes as a standalone "bit", with no manipulation necessary. Everything snaps together with magnets, and you can't screw anything up.

It uses a dataflow paradigm, but embodied physically. Just like in Generominos, bits are defined as being inputs, outputs, or "wires", which would be your converters. Every bit has directional arrows on it, showing you the input-to-output flow. The magnets help, too, since they prevent you from snapping things together in the wrong direction.

Now, LittleBits existed in 2012 when I gave this talk the first time, but it was a tiny, niche product; I don't even think they had received their series A round of venture capital funding yet. There was no guarantee they were going to stick around. In 2014, they released their CloudBit, which suddenly made them useful for prototyping Internet of Things devices. And late last year, they pivoted a bit: instead of only selling kits that required you to have a certain amount of imagination, they released their first branded, themed kit, where you build a Star Wars droid, powered and controlled by LittleBits inside. Just like kits of Star Wars ships or Harry Potter playsets revitalized Lego, I think this means LittleBits is going to stick around.

Here's an example of a Dash Button, made in LittleBits.

The catch with LittleBits is that, compared with the bare electronic parts an engineer would buy, they're an order of magnitude more expensive.

It's like a chicken dinner. If you lived on a farm, the marginal cost of going out back and grabbing a chicken and cooking and eating it is effectively zero. If you go to HEB, though, you'll pay a dollar a pound for the chicken, and you'll still have to cook it. If you go to a nice restaurant, you'll get your order taken, and be served by a waiter, and get real silverware, and it'll be on a plate, but you'll pay $20.

If you're making a professional electronic device with a big team, an ambient light sensor might cost you pennies, basically nothing. In single units, it might cost a dollar, but you still have to solder it and program it yourself. The LittleBits ambient light sensor requires nothing of you, and so costs $11.

This is the tradeoff you make when you specialize as a designer. I don't believe designers should have to code, I don't believe you should have to learn electrical engineering. You should be able to design with and for sensors, and prototype Internet of Things devices, without coding or engineering. This is how you get to do that.

You are paying to not have to learn electrical engineering, software programming, build an electronics workbench in your garage, stock it with parts, etc., etc.

All that said, we don't have any LittleBits here.

But, you can think about them in the same way you use Generominos.

These are cards showing many of the LittleBits available. Each is color-coded just like a real LittleBits bit is, and describes the inputs and outputs of the sensor or converter or output, and each shows an example of a real-world use. You can learn from them, and be ready to make a real device when you buy the LittleBits kits or the individual bits.

You could also use them to inform making new Generominos cards.

LittleBits also provides educational materials for classrooms, including invention manuals and project ideas. These offer more detailed descriptions of a smaller number of bits, but include the useful reference of real-world analogues, which I think are super helpful to understand how sensors work and can be applied.

Coming up with real-world analogues for all the Generomino cards would be neat.

Also in there are examples of how LittleBits describes the "invention cycle," which you'll see isn't too different from the design iteration process as we understand it.

Taking a conceptual narrative and turning it into a Generominos dataflow, and then turning that into a LittleBits dataflow, means you can actually begin to physically prototype your hardware and sensor interactions and ideas.

Node-RED (5m)

Node-RED is the third reason I can deliver an updated workshop today. Node-RED is a flow-based programming environment. It provides a browser-based editor that lets you construct a dataflow, from inputs, through transforms, and into outputs. Node-RED calls the black boxes "nodes," and the flows are, well, flows.
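To show what "nodes" and "flows" mean mechanically, here is a toy model in Python, not Node-RED's actual code. It borrows two real Node-RED conventions, messages that carry their data in `payload` and nodes that stop a message by returning nothing, and simplifies the rest: each node here has a single output wired to a list of downstream node ids, where real Node-RED nodes can have several outputs. The node ids are hypothetical.

```python
from typing import Callable, Dict, List, Optional

Msg = dict  # Node-RED messages are objects; msg["payload"] carries the data


class Node:
    def __init__(self, node_id: str, handler: Callable[[Msg], Optional[Msg]],
                 wires: Optional[List[str]] = None):
        self.id = node_id
        self.handler = handler    # the black box: msg in, msg (or nothing) out
        self.wires = wires or []  # ids of downstream nodes (single output, simplified)


class Flow:
    def __init__(self, nodes: List[Node]):
        self.nodes: Dict[str, Node] = {n.id: n for n in nodes}

    def inject(self, node_id: str, msg: Msg) -> None:
        node = self.nodes[node_id]
        out = node.handler(msg)
        if out is None:  # returning nothing stops the message here
            return
        for target in node.wires:
            self.inject(target, dict(out))  # shallow copy per wire


# Usage: a button press, doubled, sent to a debug log.
log: List[int] = []
flow = Flow([
    Node("button", lambda m: m, wires=["double"]),
    Node("double", lambda m: {**m, "payload": m["payload"] * 2}, wires=["debug"]),
    Node("debug", lambda m: log.append(m["payload"])),  # append returns None, ending the flow
])
flow.inject("button", {"payload": 21})
print(log)
```

In the real editor you draw those wires instead of typing them, which is the whole point.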

Node-RED is written in JavaScript. It started as a research project at IBM in 2013 and was made freely available that year. Last month, a developer in Munich named Wolfgang Flohr-Hochbichler wrote some Node-RED nodes for the sensors we have attached to our Raspberry Pi, so now you can use them without programming, just like we've been talking about.

A Raspberry Pi is a small, inexpensive computer. It's about as powerful as an old cell phone, but it supports attaching add-on boards which add inputs, sensors, and outputs. The one we have attached to ours looks like this:

This is the Rainbow HAT, an add-on board with these inputs:

- three capacitive touch buttons

- temperature sensor

- pressure sensor

and these outputs:

- red LED

- blue LED

- green LED

- seven RGB LEDs

- four-character alphanumeric display

- a buzzer

Where LittleBits allow you to snap together individual physical components, swapping bits out for different uses, the non-programming, non-engineering Raspberry Pi paradigm is to have one single add-on board with all the inputs and outputs you need, and you configure and reconfigure them in software. (There are other ways to use a Raspberry Pi, but they require programming, electrical engineering, or both.)

In this setup, you plug the Raspberry Pi into power and ethernet, you log into it and start up Node-RED, you drag and drop your nodes into a flow, you deploy it, and it's alive.

Here's a flow that works like those annoying buttons on Brenda's desk, responding differently depending on which button you press.

Here's a flow that works like an Amazon Dash button, sending a notification to my phone via IFTTT.

If you wanted to make a feedback system so people could rate you Good, Neutral, or Poor, and it notifies you over the internet, you could do it with three of these LittleBits setups, or one of these Raspberry Pi setups.
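The Dash-style and feedback flows both end by notifying you through IFTTT. If you're curious what that last node is doing, here's a hedged Python sketch of the equivalent request, assuming IFTTT's Webhooks service, whose trigger URL has the form https://maker.ifttt.com/trigger/{event}/with/key/{key}. The button-to-rating mapping, the `feedback` event name, and the `IFTTT_KEY` environment variable are hypothetical; the request is only sent when a key is set.

```python
import json
import os
import urllib.request

# Hypothetical mapping of the Rainbow HAT's three touch buttons to ratings.
RATINGS = {"A": "Good", "B": "Neutral", "C": "Poor"}
EVENT = "feedback"  # hypothetical event name you'd configure in an IFTTT applet


def build_request(button: str, key: str) -> urllib.request.Request:
    """Respond differently depending on which button was pressed."""
    rating = RATINGS[button]
    url = f"https://maker.ifttt.com/trigger/{EVENT}/with/key/{key}"
    body = json.dumps({"value1": rating}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    key = os.environ.get("IFTTT_KEY")  # assumed: your Webhooks key, kept out of the code
    if key:
        urllib.request.urlopen(build_request("A", key))
```

Whether you build it as three LittleBits setups or one Raspberry Pi flow, this is the message that ends up leaving the device.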

Just like there are all kinds of different LittleBits bits, there are different Raspberry Pi HATs.

This is a Sense HAT, used for student experiments on the Raspberry Pis on the International Space Station. It has these inputs:

- five-button joystick

- gyroscope

- accelerometer

- magnetometer

- temperature

- barometric pressure

- humidity

and the following output:

- 8x8 RGB LED matrix

It is also configurable through Node-RED.

A Raspberry Pi kit with everything you need, plus one of these HATs, will run you around $100.

I do recommend keeping your Raspberry Pis single-use. If you want to play with two different HATs, buy two Raspberry Pis. Swapping add-on boards can be as risky as replacing parts in a computer. These are bare, unprotected circuit boards we're talking about, at risk of shorts and static electricity. I have fried boards before.

Documentation for Node-RED is sometimes not great, but in theory any programmer who knows JavaScript can dig into the code and explain how it's supposed to work. One of the project's goals for this year is to grow its community, so active and interested users can have an outsized influence.

Fin (<5m)

- Conceptual model (fundamental definition)

- Conceptual framework (material exploration, speculative narrative)

- Constructive model (dataflows)

- Constructive framework (Generominos)

- Applied practice (LittleBits, Node-RED)